Corner/point features

Image feature detection, description and matching are key to many algorithms used in multi-view geometry. The key steps are:

Extract feature points

Find point (corner) features in a grey-scale image.

- class machinevisiontoolbox.ImagePointFeatures.ImagePointFeaturesMixin[source]

- SIFT(**kwargs)[source]

Find SIFT features in image

- Parameters

kwargs – arguments passed to OpenCV

- Returns

set of 2D point features

- Return type

Returns an iterable and sliceable object that contains SIFT features and descriptors.

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> sift = img.SIFT()
    >>> len(sift)  # number of features
    2986
    >>> print(sift[:5])
    SIFTFeature features, 5 points

- References

Distinctive image features from scale-invariant keypoints. David G. Lowe Int. J. Comput. Vision, 60(2):91–110, November 2004.

Robotics, Vision & Control for Python, Section 14.1, P. Corke, Springer 2023.


- ORB(scoreType='harris', **kwargs)[source]

Find ORB features in image

- Parameters

kwargs – arguments passed to OpenCV

- Returns

set of 2D point features

- Return type

Returns an iterable and sliceable object that contains 2D features with properties.

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> len(orb)  # number of features
    500
    >>> print(orb[:5])
    ORBFeature features, 5 points

- Seealso

ORBFeature, cv2.ORB_create

- BRISK(**kwargs)[source]

Find BRISK features in image

- Parameters

kwargs – arguments passed to OpenCV

- Returns

set of 2D point features

- Return type

Returns an iterable and sliceable object that contains BRISK features and descriptors.

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> brisk = img.BRISK()
    >>> len(brisk)  # number of features
    4978
    >>> print(brisk[:5])
    BRISKFeature features, 5 points

- References

Brisk: Binary robust invariant scalable keypoints. Stefan Leutenegger, Margarita Chli, and Roland Yves Siegwart. In Computer Vision (ICCV), 2011 IEEE International Conference on, pages 2548–2555. IEEE, 2011.


- AKAZE(**kwargs)[source]

Find AKAZE features in image

- Parameters

kwargs – arguments passed to OpenCV

- Returns

set of 2D point features

- Return type

Returns an iterable and sliceable object that contains AKAZE features and descriptors.

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> akaze = img.AKAZE()
    >>> len(akaze)  # number of features
    1445
    >>> print(akaze[:5])
    AKAZEFeature features, 5 points

- References

Fast explicit diffusion for accelerated features in nonlinear scale spaces. Pablo F Alcantarilla, Jesús Nuevo, and Adrien Bartoli. Trans. Pattern Anal. Machine Intell, 34(7):1281–1298, 2011.


- Harris(**kwargs)[source]

Find Harris features in image

- Parameters

nfeat (int, optional) – maximum number of features to return, defaults to 250

k (float, optional) – Harris constant, defaults to 0.04

scale (int, optional) – nonlocal minima suppression distance, defaults to 7

hw (int, optional) – half width of kernel, defaults to 2

patch (int, optional) – patch half width, defaults to 5

- Returns

set of 2D point features

- Return type

Harris features are detected as non-local maxima in the Harris corner strength image. The descriptor is a unit-normalized vector of the image elements in a \(w_p \times w_p\) patch around the detected feature, where \(w_p = 2\mathtt{patch}+1\).
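The patch descriptor described above can be sketched in plain Python. This is an illustration only, not the toolbox's implementation: the function name `patch_descriptor`, the list-of-rows image and the handling of an all-zero patch are assumptions made for the example.

```python
import math

def patch_descriptor(image, u, v, patch=5):
    """Return the unit-normalized patch around pixel (u, v) as a flat list.

    image is a list of rows of grey levels; u is the column and v the row.
    Assumes the whole patch lies inside the image.
    """
    # the patch is w_p x w_p pixels, with w_p = 2 * patch + 1
    values = [
        float(image[r][c])
        for r in range(v - patch, v + patch + 1)
        for c in range(u - patch, u + patch + 1)
    ]
    norm = math.sqrt(sum(x * x for x in values))
    if norm == 0:
        return values  # an all-zero patch cannot be normalized
    return [x / norm for x in values]

# synthetic 7x7 image whose grey level is row + column
image = [[r + c for c in range(7)] for r in range(7)]
d = patch_descriptor(image, u=3, v=3, patch=1)  # 3x3 patch, 9 elements
```

With `patch=1` the descriptor has 9 elements and unit Euclidean length, so two descriptors can be compared independently of overall patch brightness scaling.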

Returns an iterable and sliceable object that contains Harris features and descriptors.

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> harris = img.Harris()
    >>> len(harris)  # number of features
    3541
    >>> print(harris[:5])
    HarrisFeature features, 5 points

Note

The Harris corner detector and descriptor are not part of OpenCV and have been custom written for pedagogical purposes.

- References

A combined corner and edge detector. C. G. Harris and M. J. Stephens. Proceedings of the Fourth Alvey Vision Conference, Manchester, 1988, pp. 147–151.

Robotics, Vision & Control for Python, Section 12.3.1, P. Corke, Springer 2023.


- ComboFeature(detector, descriptor, det_opts, des_opts)[source]

Combination feature detector and descriptor

- Parameters

detector (str) – detector name

descriptor (str) – descriptor name

det_opts (dict) – options for detector

des_opts (dict) – options for descriptor

- Returns

set of 2D point features

- Return type

BaseFeature2D subclass

Detect corner features using the detector named by detector and describe them using the descriptor named by descriptor. Many combinations are possible.

Warning

Incomplete

- Seealso

BOOSTFeature, BRIEFFeature, DAISYFeature, FREAKFeature, LATCHFeature, LUCIDFeature

Feature matching

The match object performs and describes point feature correspondence.

- class machinevisiontoolbox.ImagePointFeatures.FeatureMatch(m, fv1, fv2, inliers=None)[source]

Create feature match object

- Parameters

m (list of tuples (int, int, float)) – a list of match tuples (id1, id2, distance)

fv1 (BaseFeature2D) – first set of features

fv2 (BaseFeature2D) – second set of features

inliers (array_like of bool) – inlier status

A FeatureMatch object describes a set of correspondences between two feature sets. The object is constructed from two feature sets and a list of tuples (id1, id2, distance) where id1 and id2 are indices into the first and second feature sets, and distance is the distance between the two features' descriptors.

A FeatureMatch object:

- has a length, the number of matches it contains
- can be sliced to extract a subset of matches
- records the inlier/outlier status of matches

Note

This constructor is not normally called directly; it is used by the match method of the BaseFeature2D subclass.

- Seealso

BaseFeature2D.match, cv2.KeyPoint

- __getitem__(i)[source]

Get matches from feature match object

- Parameters

i (int or slice) – match subset

- Raises

IndexError – index out of range

- Returns

subset of matches

- Return type

Match instance

Allow indexing, slicing or iterating over the set of matches


- __len__()[source]

Number of matches

- Returns

number of matches

- Return type

int

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb1 = Image.Read("eiffel-1.png").ORB()
    >>> orb2 = Image.Read("eiffel-2.png").ORB()
    >>> matches = orb1.match(orb2)
    >>> len(matches)
    39


- correspondence()[source]

Feature correspondences

- Returns

feature correspondences as array columns

- Return type

ndarray(2,N)

Return the correspondences as an array where each column contains the index into the first and second feature sets.

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb1 = Image.Read("eiffel-1.png").ORB()
    >>> orb2 = Image.Read("eiffel-2.png").ORB()
    >>> matches = orb1.match(orb2)
    >>> matches.correspondence()
    array([[410, 335, 483, 475, 478, 294, 257, 418, 200, 477, 461, 448, 431,
            490, 456, 446, 457, 334, 133, 56, 441, 254, 250, 460, 259, 497,
            380, 399, 1, 287, 246, 67, 134, 140, 468, 306, 132, 496, 343],
           [360, 298, 448, 451, 450, 204, 233, 351, 149, 471, 408, 398, 347,
            465, 389, 397, 459, 268, 96, 78, 404, 163, 235, 464, 194, 447,
            324, 367, 168, 239, 217, 194, 65, 260, 468, 266, 21, 477, 389]])
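The relationship between the match tuples and the correspondence array can be illustrated with a small stand-alone sketch. The tuple values below are illustrative, and the helper is a hypothetical stand-in that uses nested lists rather than the ndarray the method returns.

```python
# illustrative match tuples (id1, id2, distance)
matches = [(410, 360, 79.0), (335, 298, 84.1), (483, 448, 85.9)]

def correspondence(matches):
    """Return [row of id1, row of id2], that is a 2 x N nested list."""
    ids1 = [m[0] for m in matches]
    ids2 = [m[1] for m in matches]
    return [ids1, ids2]

c = correspondence(matches)
# column k pairs feature c[0][k] of the first set with c[1][k] of the second
first_pair = (c[0][0], c[1][0])
```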

- by_id1(id)[source]

Find match by feature id in first set

- Parameters

id (int) – id of feature in the first feature set

- Returns

match that includes feature id, or None

- Return type

FeatureMatch instance containing one correspondence

A FeatureMatch object can contain multiple correspondences, which are essentially tuples (id1, id2) where id1 and id2 are indices into the first and second feature sets that were matched. Each feature has a position, strength, scale and id.

This method returns the match that contains the feature in the first feature set with the specified id. If no such match exists it returns None.

Note

For efficient lookup, on the first call a dict is built that maps feature id to index in the feature set.

Useful when features in the sets come from multiple images and id is used to indicate the source image.
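The lazily built lookup mentioned in the note might look roughly like the following. This is a toy stand-in, not the toolbox class: `MatchLookup`, its attributes and the assumption that feature ids are unique are all hypothetical.

```python
class MatchLookup:
    """Toy stand-in for the id lookup described above."""

    def __init__(self, matches, ids1):
        self.matches = matches      # list of (index1, index2, distance)
        self.ids1 = ids1            # id of each feature in the first set
        self._index_of_id = None    # built lazily on the first lookup

    def by_id1(self, feature_id):
        if self._index_of_id is None:
            # first call: map feature id -> index in the first feature set
            self._index_of_id = {fid: k for k, fid in enumerate(self.ids1)}
        index = self._index_of_id.get(feature_id)
        if index is None:
            return None             # no feature has this id
        for m in self.matches:
            if m[0] == index:
                return m
        return None                 # the feature exists but was not matched

ids1 = [100, 101, 102, 103]
matches = [(0, 2, 12.0), (3, 1, 20.5)]
lookup = MatchLookup(matches, ids1)
found = lookup.by_id1(103)      # feature index 3 appears in the second match
missing = lookup.by_id1(101)    # feature index 1 appears in no match
```

Building the dict once makes repeated lookups cheap, at the cost of a single pass over the feature set on the first call.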

- Seealso

BaseFeature2DBaseFeature2D.idby_id2

- by_id2(i)[source]

Find match by feature id in second set

- Parameters

id (int) – id of feature in the second feature set

- Returns

match that includes feature id, or None

- Return type

FeatureMatch instance containing one correspondence

A FeatureMatch object can contain multiple correspondences, which are essentially tuples (id1, id2) where id1 and id2 are indices into the first and second feature sets that were matched. Each feature has a position, strength, scale and id.

This method returns the match that contains the feature in the second feature set with the specified id. If no such match exists it returns None.

Note

For efficient lookup, on the first call a dict is built that maps feature id to index in the feature set.

Useful when features in the sets come from multiple images and id is used to indicate the source image.

- Seealso

BaseFeature2DBaseFeature2D.idby_id1

- property status

Inlier status of matches

- Returns

inlier status of matches

- Return type

bool

- table()[source]

Print matches in tabular form

Each row in the table includes: the index of the match, inlier/outlier status, match strength, feature coordinates.

- Seealso

__str__

- property inliers

Extract inlier matches

- Returns

new match object containing only the inliers

- Return type

FeatureMatch instance

Note

Inlier/outlier status is typically set by some RANSAC-based algorithm that applies a geometric constraint to the sets of putative matches.
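As a rough picture of how the inlier status partitions the matches, the sketch below splits a list of match tuples using a list of boolean flags. The data are made up, and the flags stand in for whatever a RANSAC-based estimator would report.

```python
# made-up match tuples (id1, id2, distance)
matches = [(0, 5, 10.0), (1, 7, 12.5), (2, 3, 40.0), (3, 9, 55.0)]
# hypothetical inlier flags, one per match
status = [True, True, False, True]

inliers = [m for m, ok in zip(matches, status) if ok]
outliers = [m for m, ok in zip(matches, status) if not ok]
```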

- Seealso

CentralCamera.points2F

- property outliers

Extract outlier matches

- Returns

new match object containing only the outliers

- Return type

FeatureMatch instance

Note

Inlier/outlier status is typically set by some RANSAC-based algorithm that applies a geometric constraint to the sets of putative matches.

- Seealso

CentralCamera.points2F

- subset(N=100)[source]

Select subset of features

- Parameters

N (int, optional) – the number of features to select, defaults to 100

- Returns

feature vector

- Return type

BaseFeature2D

Return N features selected in constant steps from the input feature vector, i.e. feature 0, s, 2s, etc.

- plot(*pos, darken=False, ax=None, width=None, block=False, **kwargs)[source]

Plot matches

- Parameters

darken (bool, optional) – darken the underlying images, defaults to False

width (float, optional) – figure width in millimetres, defaults to Matplotlib default

block (bool, optional) – Matplotlib figure blocks until window closed, defaults to False

Displays the original pair of images side by side, as greyscale images, and overlays the matches.

- property distance

Distance between corresponding features

- Returns

distance between the descriptors of corresponding features

- Return type

float or ndarray(N)

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb1 = Image.Read("eiffel-1.png").ORB()
    >>> orb2 = Image.Read("eiffel-2.png").ORB()
    >>> matches = orb1.match(orb2)
    >>> matches.distance
    array([ 79. , 84.1368, 85.8662, 98.3514, 126.5701, 130.3917, 131.5637,
           137.9203, 139.9607, 143.0385, 156.5056, 158.6474, 173.5022, 176.6579,
           185.8817, 188.2817, 190.5597, 194.7871, 197.4411, 201.5912, 203.2511,
           203.5829, 210.8317, 220.6944, 221.1493, 228.9978, 231.225 , 231.5016,
           235.3593, 239.6915, 249.5416, 257.0117, 258.5962, 273.8211, 274.2827,
           274.6252, 282.2959, 284.1707, 286.7211])
    >>> matches[0].distance
    79.0

- property p1

Feature coordinate in first image

- Returns

feature coordinate

- Return type

ndarray(2) or ndarray(2,N)

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb1 = Image.Read("eiffel-1.png").ORB()
    >>> orb2 = Image.Read("eiffel-2.png").ORB()
    >>> matches = orb1.match(orb2)
    >>> matches.p1
    array([[783.821 , 710.2081, 730.9691, 673.6382, 845.6309, 824.2561, 704.16 ,
            709.1714, 825.1201, 834.8813, 806.2159, 839.0617, 806.2159, 885.0459,
            785.314 , 845.0337, 689.7625, 758.5921, 788.4 , 960. , 827.1178,
            745.92 , 817.92 , 722.6083, 828.0001, 784.7168, 756.8641, 823.6341,
            717. , 711.9361, 732.96 , 828. , 828.0001, 927.6 , 758.4401,
            755.136 , 747.6 , 458.6473, 806.6305],
           [539.9656, 416.448 , 541.0605, 537.4773, 533.8941, 639.36 , 591.84 ,
            418.0379, 639.36 , 530.3109, 415.0519, 531.5053, 413.0612, 623.4736,
            540.4633, 537.4773, 650.9447, 646.272 , 532.8 , 683. , 641.9868,
            540. , 590.4 , 621.0848, 532.8 , 537.4773, 644.8897, 641.9867,
            707. , 589.248 , 544.32 , 747. , 532.8 , 690. , 644.9727,
            539.136 , 540. , 548.2268, 748.5697]])
    >>> matches[0].p1
    array([783.821 , 539.9656])

- property p2

Feature coordinate in second image

- Returns

feature coordinate

- Return type

ndarray(2) or ndarray(2,N)

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb1 = Image.Read("eiffel-1.png").ORB()
    >>> orb2 = Image.Read("eiffel-2.png").ORB()
    >>> matches = orb1.match(orb2)
    >>> matches.p2
    array([[678.0673, 613.4401, 636.0148, 585.2531, 728.5803, 714.2401, 609.12 ,
            613.7857, 715.2 , 727.3859, 694.2415, 721.613 , 694.6561, 764.4121,
            676.8232, 729.0779, 597.197 , 658.08 , 681. , 828. , 714.148 ,
            645.6 , 705.6 , 627.0568, 714. , 677.8185, 658.368 , 713.3185,
            571.2 , 614.88 , 633.6 , 714. , 714. , 239.04 , 659.9026,
            652.32 , 646. , 419.2323, 676.8232],
           [719.5393, 613.4401, 719.6223, 719.6223, 716.6364, 807.84 , 764.64 ,
            613.7857, 805.2 , 716.6364, 612.1269, 711.6597, 611.7121, 794.272 ,
            719.1246, 716.6364, 818.1599, 815.04 , 713. , 845. , 808.7042,
            718.8 , 763.2 , 791.2859, 712.8 , 719.6223, 813.8881, 808.7041,
            874.8 , 763.2 , 722.88 , 712.8 , 713. , 853.92 , 812.1879,
            720. , 720. , 748.885 , 719.1246]])
    >>> matches[0].p2
    array([678.0673, 719.5393])

- property descriptor1

Feature descriptor in first image

- Returns

feature descriptor

- Return type

ndarray(M) or ndarray(N,M)

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb1 = Image.Read("eiffel-1.png").ORB()
    >>> orb2 = Image.Read("eiffel-2.png").ORB()
    >>> matches = orb1.match(orb2)
    >>> matches.descriptor1
    array([[124, 241, 58, ..., 2, 102, 35],
           [ 34, 149, 146, ..., 94, 189, 198],
           [ 1, 176, 59, ..., 130, 71, 251],
           ...,
           [180, 109, 248, ..., 243, 226, 41],
           [125, 212, 254, ..., 253, 125, 247],
           [ 60, 233, 123, ..., 187, 214, 115]], dtype=uint8)
    >>> matches[0].descriptor1
    array([124, 241, 58, 104, 25, 205, 83, 252, 227, 136, 172, 8, 127,
           219, 72, 48, 152, 249, 220, 112, 75, 35, 250, 4, 251, 221,
           5, 123, 98, 2, 102, 35], dtype=uint8)

- property descriptor2

Feature descriptor in second image

- Returns

feature descriptor

- Return type

ndarray(M) or ndarray(N,M)

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb1 = Image.Read("eiffel-1.png").ORB()
    >>> orb2 = Image.Read("eiffel-2.png").ORB()
    >>> matches = orb1.match(orb2)
    >>> matches.descriptor2
    array([[124, 241, 59, ..., 2, 102, 35],
           [ 6, 133, 146, ..., 78, 189, 198],
           [ 32, 180, 59, ..., 130, 71, 250],
           ...,
           [181, 125, 235, ..., 243, 160, 249],
           [173, 164, 230, ..., 140, 235, 192],
           [124, 249, 122, ..., 162, 102, 99]], dtype=uint8)
    >>> matches[0].descriptor2
    array([124, 241, 59, 96, 25, 205, 83, 252, 227, 136, 172, 8, 95,
           223, 72, 48, 184, 249, 220, 112, 75, 35, 186, 4, 251, 221,
           5, 127, 98, 2, 102, 35], dtype=uint8)

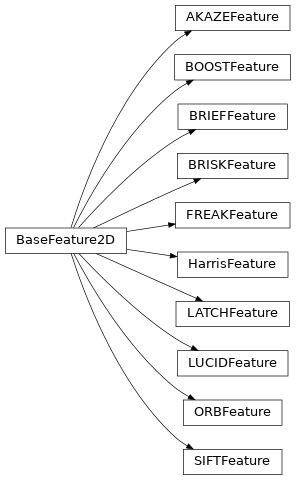

Feature representation

The base class for all point features.

- class machinevisiontoolbox.ImagePointFeatures.SIFTFeature(kp=None, des=None, scale=False, orient=False, image=None)[source]

Create set of SIFT point features

Create set of 2D point features

- Parameters

kp (list of N elements, optional) – list of opencv.KeyPoint objects, one per feature, defaults to None

des (ndarray(N,M), optional) – Feature descriptor, each is an M-vector, defaults to None

scale (bool, optional) – features have an inherent scale, defaults to False

orient (bool, optional) – features have an inherent orientation, defaults to False

A BaseFeature2D object:

- has a length, the number of feature points it contains
- can be sliced to extract a subset of features

This object behaves like a list, allowing indexing, slicing and iteration over individual features. It also supports a number of convenience methods.

Note

OpenCV considers feature points as opencv.KeyPoint objects and the descriptors as a multirow NumPy array. This class provides a more convenient abstraction.

- __getitem__(i)

Get item from point feature object (base method)

- Parameters

i (int or slice) – index

- Raises

IndexError – index out of range

- Returns

subset of point features

- Return type

BaseFeature2D instance

This method allows a BaseFeature2D object to be indexed, sliced or iterated.

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> print(orb[:5])  # first 5 ORB features
    ORBFeature features, 5 points
    >>> print(orb[::50])  # every 50th ORB feature
    ORBFeature features, 10 points


- __len__()

Number of features (base method)

- Returns

number of features

- Return type

int

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> len(orb)  # number of features
    500


- property descriptor

Descriptor of feature

- Returns

Descriptor

- Return type

ndarray(N,M)

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].descriptor.shape
    (32,)
    >>> orb[0].descriptor
    array([112, 44, 105, 97, 93, 249, 81, 39, 103, 229, 174, 153, 35,
           45, 82, 20, 8, 166, 237, 28, 24, 106, 229, 62, 223, 197,
           16, 123, 227, 12, 86, 126], dtype=uint8)
    >>> orb[:5].descriptor.shape
    (5, 32)

Note

For a single feature the result is a 1D array of length M; for N features it is an N x M array with one descriptor per row.

- distance(other, metric='L2')

Distance between feature sets

- Parameters

other (BaseFeature2D) – second set of features

metric (str, optional) – feature distance metric, one of “ncc”, “L1”, “L2” [default]

- Returns

distance between features

- Return type

ndarray(N1, N2)

Compute the distance matrix between two sets of features. If the first set of features has length N1 and other is of length N2, then compute an \(N_1 \times N_2\) matrix where element \(D_{ij}\) is the distance between feature \(i\) in the first set and feature \(j\) in the other set. The position of the closest match in row \(i\) is the best matching feature to feature \(i\).

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb1 = Image.Read("eiffel-1.png").ORB()
    >>> orb2 = Image.Read("eiffel-2.png").ORB()
    >>> dist = orb1.distance(orb2)
    >>> dist.shape
    (500, 500)

Note

The matrix is symmetric only when a feature set is compared with itself; in general it is \(N_1 \times N_2\).

For the metrics “L1” and “L2” the best match is the smallest distance.

For the metric “ncc” the best match is the largest value. A value over 0.8 is often considered to be a good match.
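A minimal sketch of the distance matrix and the per-row best match, using the L2 metric on made-up two-element descriptors. The function name `distance_matrix` is used here only for illustration; it is not the toolbox method, which works on descriptor arrays.

```python
import math

def distance_matrix(set1, set2):
    """L2 distance between every descriptor in set1 and every one in set2."""
    return [[math.dist(a, b) for b in set2] for a in set1]

set1 = [[0.0, 0.0], [1.0, 1.0], [4.0, 0.0]]   # N1 = 3 descriptors
set2 = [[1.0, 0.9], [0.1, 0.0]]               # N2 = 2 descriptors
D = distance_matrix(set1, set2)               # 3 rows by 2 columns

# for each feature i in the first set, the column of the smallest distance
best = [min(range(len(row)), key=row.__getitem__) for row in D]
```

For a similarity measure such as “ncc” the same idea applies with `max` in place of `min`.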


- drawKeypoints(image, drawing=None, isift=None, flags=4, **kwargs)

Render keypoints into image

- Parameters

image (Image) – original image

drawing (_type_, optional) – _description_, defaults to None

isift (_type_, optional) – _description_, defaults to None

flags (_type_, optional) – _description_, defaults to cv.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS

- Returns

image with rendered keypoints

- Return type

Image instance

If image is None then the keypoints are rendered over a black background.

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].p
    array([[847.],
           [689.]])
    >>> orb[:5].p
    array([[847., 717., 647., 37., 965.],
           [689., 707., 690., 373., 691.]])

- filter(**kwargs)

Filter features

- Parameters

kwargs – the filter parameters

- Returns

filtered features

- Return type

BaseFeature2D instance

The filter is defined by arguments:

| argument | value | select if |
|---|---|---|
| scale | (minimum, maximum) | minimum <= scale <= maximum |
| minscale | minimum | minimum <= scale |
| maxscale | maximum | scale <= maximum |
| strength | (minimum, maximum) | minimum <= strength <= maximum |
| minstrength | minimum | minimum <= strength |
| percentstrength | percent | strength >= percent * max(strength) |
| nstrongest | N | strength |

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb = Image.Read("eiffel-1.png").ORB()
    >>> len(orb)
    500
    >>> orb2 = orb.filter(minstrength=0.001)
    >>> len(orb2)
    407

Note

If value is a range then numpy.Inf or -numpy.Inf can be used as values.
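The filter criteria can be pictured as a chain of predicates. The sketch below covers three of the arguments over plain dictionaries; `filter_features` and the dictionary layout are hypothetical, and `percentstrength` is applied literally as in the table, as a multiplier of the maximum strength.

```python
import math

def filter_features(features, scale=None, minstrength=None, percentstrength=None):
    """Keep the features that satisfy every criterion that was supplied."""
    kept = list(features)
    if scale is not None:
        lo, hi = scale
        kept = [f for f in kept if lo <= f["scale"] <= hi]
    if minstrength is not None:
        kept = [f for f in kept if f["strength"] >= minstrength]
    if percentstrength is not None and features:
        # threshold relative to the strongest feature in the input set
        threshold = percentstrength * max(f["strength"] for f in features)
        kept = [f for f in kept if f["strength"] >= threshold]
    return kept

features = [
    {"scale": 31.0, "strength": 0.0007},
    {"scale": 31.0, "strength": 0.0031},
    {"scale": 37.2, "strength": 0.0013},
    {"scale": 44.6, "strength": 0.0009},
]
strong = filter_features(features, minstrength=0.001)
bounded = filter_features(features, scale=(-math.inf, 40))  # open lower bound
top = filter_features(features, percentstrength=0.5)
```

An infinite bound, as in the note above, turns a two-sided range into a one-sided test.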

- gridify(nbins, nfeat)

Sort features into grid

- Parameters

nfeat (int) – maximum number of features per grid cell

nbins (int) – number of grid cells horizontally and vertically

- Returns

set of gridded features

- Return type

BaseFeature2D instance

Select features such that no more than nfeat features fall into each grid cell. The image is divided into an nbins x nbins grid.

Warning

Takes the first nfeat features in each grid cell, not the nfeat strongest. To keep the strongest, sort the features by strength before calling this method.
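The grid selection can be sketched as follows, under the assumption that the image size is passed in explicitly; the function, its `width` and `height` parameters and the dictionary layout are hypothetical and chosen only to make the example self-contained.

```python
def gridify(features, width, height, nbins, nfeat):
    """Keep at most nfeat features per cell of an nbins x nbins grid."""
    counts = {}
    kept = []
    for f in features:
        u, v = f["p"]
        # integer cell indices, clamped so points on the far edge stay in range
        col = min(int(u * nbins / width), nbins - 1)
        row = min(int(v * nbins / height), nbins - 1)
        if counts.get((row, col), 0) < nfeat:
            counts[(row, col)] = counts.get((row, col), 0) + 1
            kept.append(f)
    return kept

features = [
    {"p": (10, 10), "strength": 0.1},
    {"p": (20, 15), "strength": 0.9},
    {"p": (30, 5), "strength": 0.5},
    {"p": (90, 90), "strength": 0.2},
]
# sort by strength first so that each cell keeps its strongest features
by_strength = sorted(features, key=lambda f: f["strength"], reverse=True)
kept = gridify(by_strength, width=100, height=100, nbins=2, nfeat=2)
```

Three features fall in the top-left cell, so the weakest of them is dropped; without the sort, the first two in list order would be kept instead.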

- property id

Image id for feature point

- Returns

image id

- Return type

int or list of int

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].id
    -1
    >>> orb[:5].id
    array([-1, -1, -1, -1, -1])

Note

Defined by the id attribute of the image passed to the feature detector.

- match(other, ratio=0.75, crosscheck=False, metric='L2', sort=True, top=None, thresh=None)

Match point features

- Parameters

other (BaseFeature2D) – set of feature points

ratio (float, optional) – parameter for Lowe’s ratio test, defaults to 0.75

crosscheck (bool, optional) – perform left-right cross check, defaults to False

metric (str, optional) – distance metric, one of: ‘L1’, ‘L2’ [default], ‘hamming’, ‘hamming2’

sort (bool, optional) – sort features by strength, defaults to True

- Raises

ValueError – bad metric name provided

- Returns

set of candidate matches

- Return type

FeatureMatch instance

Return a match object that contains pairs of putative corresponding points. If crosscheck is True the ratio test is disabled.

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb1 = Image.Read("eiffel-1.png").ORB()
    >>> orb2 = Image.Read("eiffel-2.png").ORB()
    >>> m = orb1.match(orb2)
    >>> len(m)
    39
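Lowe's ratio test, which the ratio parameter controls, accepts a match only when the best candidate is clearly closer than the runner-up. The sketch below applies it to a small made-up distance matrix; the function name and the final ordering by distance are assumptions for illustration, not a description of the toolbox internals.

```python
def ratio_test_matches(D, ratio=0.75):
    """Return (i, j, distance) for rows of D that pass Lowe's ratio test.

    D[i][j] is the distance between feature i of the first set and feature j
    of the second set; every row needs at least two entries.
    """
    matches = []
    for i, row in enumerate(D):
        order = sorted(range(len(row)), key=row.__getitem__)
        best, second = order[0], order[1]
        # accept only if the best match is clearly better than the runner-up
        if row[best] < ratio * row[second]:
            matches.append((i, best, row[best]))
    # smallest distance first
    return sorted(matches, key=lambda m: m[2])

D = [
    [10.0, 50.0, 60.0],   # unambiguous: 10 < 0.75 * 50
    [30.0, 32.0, 90.0],   # ambiguous: 30 >= 0.75 * 32, rejected
    [70.0, 80.0, 5.0],    # unambiguous: 5 < 0.75 * 70
]
m = ratio_test_matches(D)
```

A smaller ratio is stricter and yields fewer, more distinctive matches.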


- property octave

Octave of feature

- Returns

scale space octave containing the feature

- Return type

float or list of float

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].octave
    0
    >>> orb[:5].octave
    array([0, 0, 0, 0, 0])

- property orientation

Orientation of feature

- Returns

Orientation in radians

- Return type

float or list of float

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].orientation
    0.9509157556747451
    >>> orb[:5].orientation
    array([0.9509, 1.4389, 1.3356, 5.0882, 1.5255])

- property p

Feature coordinates

- Returns

Feature centroids as matrix columns

- Return type

ndarray(2,N)

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].p
    array([[847.],
           [689.]])
    >>> orb[:5].p
    array([[847., 717., 647., 37., 965.],
           [689., 707., 690., 373., 691.]])

- plot(*args, ax=None, filled=False, color='blue', alpha=1, hand=False, handcolor='blue', handthickness=1, handalpha=1, **kwargs)

Plot features using Matplotlib

- Parameters

ax (axes, optional) – axes to plot onto, defaults to None

filled (bool, optional) – shapes are filled, defaults to False

hand (bool, optional) – draw clock hand to indicate orientation, defaults to False

handcolor (str, optional) – color of clock hand, defaults to ‘blue’

handthickness (int, optional) – thickness of clock hand in pixels, defaults to 1

handalpha (int, optional) – transparency of clock hand, defaults to 1

kwargs (dict) – options passed to matplotlib.Circle such as color, alpha, edgecolor, etc.

Plot circles to represent the position and scale of features on a Matplotlib axis. Orientation, if applicable, is indicated by a radial line from the circle centre to the circumference, like a clock hand.
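The geometry of the clock hand can be written down directly. The sketch below assumes that the circle radius equals the feature scale and that orientation is measured in radians in image coordinates; both are assumptions for illustration, and `clock_hand` is a hypothetical helper.

```python
import math

def clock_hand(u, v, scale, orientation):
    """End points of a radial line for a feature circle of radius scale.

    orientation is in radians; in image coordinates v increases downward.
    """
    tip_u = u + scale * math.cos(orientation)
    tip_v = v + scale * math.sin(orientation)
    return (u, v), (tip_u, tip_v)

# a feature at (847, 689) with scale 31 and zero orientation
centre, tip = clock_hand(847.0, 689.0, 31.0, 0.0)
```

The hand always has length equal to the radius, so its tip lies on the circumference whatever the orientation.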

- property scale

Scale of feature

- Returns

Scale

- Return type

float or list of float

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].scale
    31.0
    >>> orb[:5].scale
    array([31., 31., 31., 31., 31.])

- sort(by='strength', descending=True, inplace=False)

Sort features

- Parameters

by (str, optional) – sort by 'strength' [default] or 'scale'

descending (bool, optional) – sort in descending order, defaults to True

- Returns

sorted features

- Return type

BaseFeature2D instance

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb = Image.Read("eiffel-1.png").ORB()
    >>> orb2 = orb.sort('strength')
    >>> orb2[:5].strength
    array([0.0031, 0.003 , 0.0029, 0.0027, 0.0025])

- property strength

Strength of feature

- Returns

Strength

- Return type

float or list of float

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].strength
    0.000677075469866395
    >>> orb[:5].strength
    array([0.0007, 0.0009, 0.0031, 0.0008, 0.0013])

- subset(N=100)

Select subset of features

- Parameters

N (int, optional) – the number of features to select, defaults to 100

- Returns

subset of features

- Return type

BaseFeature2D instance

Return N features selected in constant steps from the input feature vector, i.e. feature 0, s, 2s, etc.

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb = Image.Read("eiffel-1.png").ORB()
    >>> len(orb)
    500
    >>> orb2 = orb.subset(50)
    >>> len(orb2)
    50
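Constant-step selection can be sketched with list slicing. The step rule used here, `len(features) // N` with a minimum of 1, is an assumption; the toolbox may compute the step differently.

```python
def subset(features, N=100):
    """Pick up to N features at a constant step through the list."""
    step = max(1, len(features) // N)   # assumed step rule
    return features[::step][:N]

features = list(range(500))             # stand-in for 500 features
picked = subset(features, 50)           # features 0, 10, 20, ...
```

Unlike taking the first N features, this samples evenly across the whole set, which matters when the features are ordered, for example by strength.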

- support(images, N=50)

Find support region

- Parameters

images (Image or list of Image) – the image from which the feature was extracted

N (int, optional) – size of square window, defaults to 50

- Returns

support region

- Return type

Image instance

The support region about the feature’s centroid is extracted, rotated and scaled.

Example:

Note

If the features come from multiple images then the feature’s id attribute is used to index into images, which must be a list of Image objects.

- table()

Print features in tabular form

Each row in the table includes: the index in the feature vector, centroid coordinate, feature strength, feature scale and image id.

- Seealso

__str__

- property u

Horizontal coordinate of feature point

- Returns

Horizontal coordinate

- Return type

float or list of float

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].u
    847.0
    >>> orb[:5].u
    array([847., 717., 647., 37., 965.])

- property v

Vertical coordinate of feature point

- Returns

Vertical coordinate

- Return type

float or list of float

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].v
    689.0
    >>> orb[:5].v
    array([689., 707., 690., 373., 691.])

- class machinevisiontoolbox.ImagePointFeatures.ORBFeature(kp=None, des=None, scale=False, orient=False, image=None)[source]

Create set of ORB point features

Create set of 2D point features

- Parameters

kp (list of N elements, optional) – list of opencv.KeyPoint objects, one per feature, defaults to None

des (ndarray(N,M), optional) – Feature descriptor, each is an M-vector, defaults to None

scale (bool, optional) – features have an inherent scale, defaults to False

orient (bool, optional) – features have an inherent orientation, defaults to False

A BaseFeature2D object:

- has a length, the number of feature points it contains
- can be sliced to extract a subset of features

This object behaves like a list, allowing indexing, slicing and iteration over individual features. It also supports a number of convenience methods.

Note

OpenCV considers feature points as opencv.KeyPoint objects and the descriptors as a multirow NumPy array. This class provides a more convenient abstraction.

- __getitem__(i)

Get item from point feature object (base method)

- Parameters

i (int or slice) – index

- Raises

IndexError – index out of range

- Returns

subset of point features

- Return type

BaseFeature2D instance

This method allows a BaseFeature2D object to be indexed, sliced or iterated.

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> print(orb[:5])  # first 5 ORB features
    ORBFeature features, 5 points
    >>> print(orb[::50])  # every 50th ORB feature
    ORBFeature features, 10 points


- __len__()

Number of features (base method)

- Returns

number of features

- Return type

int

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> len(orb)  # number of features
    500


- property descriptor

Descriptor of feature

- Returns

Descriptor

- Return type

ndarray(N,M)

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].descriptor.shape
    (32,)
    >>> orb[0].descriptor
    array([112, 44, 105, 97, 93, 249, 81, 39, 103, 229, 174, 153, 35,
           45, 82, 20, 8, 166, 237, 28, 24, 106, 229, 62, 223, 197,
           16, 123, 227, 12, 86, 126], dtype=uint8)
    >>> orb[:5].descriptor.shape
    (5, 32)

Note

For a single feature the result is a 1D array of length M; for N features it is an N x M array with one descriptor per row.

- distance(other, metric='L2')

Distance between feature sets

- Parameters

other (BaseFeature2D) – second set of features

metric (str, optional) – feature distance metric, one of “ncc”, “L1”, “L2” [default]

- Returns

distance between features

- Return type

ndarray(N1, N2)

Compute the distance matrix between two sets of features. If the first set of features has length N1 and other is of length N2, then compute an \(N_1 \times N_2\) matrix where element \(D_{ij}\) is the distance between feature \(i\) in the first set and feature \(j\) in the other set. The position of the closest match in row \(i\) is the best matching feature to feature \(i\).

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb1 = Image.Read("eiffel-1.png").ORB()
    >>> orb2 = Image.Read("eiffel-2.png").ORB()
    >>> dist = orb1.distance(orb2)
    >>> dist.shape
    (500, 500)

Note

The matrix is symmetric only when a feature set is compared with itself; in general it is \(N_1 \times N_2\).

For the metrics “L1” and “L2” the best match is the smallest distance.

For the metric “ncc” the best match is the largest value. A value over 0.8 is often considered to be a good match.


- drawKeypoints(image, drawing=None, isift=None, flags=4, **kwargs)

Render keypoints into image

- Parameters

image (Image) – original image

drawing (_type_, optional) – _description_, defaults to None

isift (_type_, optional) – _description_, defaults to None

flags (_type_, optional) – _description_, defaults to cv.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS

- Returns

image with rendered keypoints

- Return type

Image instance

If image is None then the keypoints are rendered over a black background.

Example:

    >>> from machinevisiontoolbox import Image
    >>> img = Image.Read("eiffel-1.png")
    >>> orb = img.ORB()
    >>> orb[0].p
    array([[847.],
           [689.]])
    >>> orb[:5].p
    array([[847., 717., 647., 37., 965.],
           [689., 707., 690., 373., 691.]])

- filter(**kwargs)

Filter features

- Parameters

kwargs – the filter parameters

- Returns

filtered features

- Return type

BaseFeature2D instance

The filter is defined by arguments:

| argument | value | select if |
|---|---|---|
| scale | (minimum, maximum) | minimum <= scale <= maximum |
| minscale | minimum | minimum <= scale |
| maxscale | maximum | scale <= maximum |
| strength | (minimum, maximum) | minimum <= strength <= maximum |
| minstrength | minimum | minimum <= strength |
| percentstrength | percent | strength >= percent * max(strength) |
| nstrongest | N | strength |

Example:

    >>> from machinevisiontoolbox import Image
    >>> orb = Image.Read("eiffel-1.png").ORB()
    >>> len(orb)
    500
    >>> orb2 = orb.filter(minstrength=0.001)
    >>> len(orb2)
    407

Note

If value is a range then numpy.Inf or -numpy.Inf can be used as values.

- gridify(nbins, nfeat)

Sort features into grid

- Parameters

nfeat (int) – maximum number of features per grid cell

nbins (int) – number of grid cells horizontally and vertically

- Returns

set of gridded features

- Return type

BaseFeature2D instance

Select features such that no more than nfeat features fall into each grid cell. The image is divided into an nbins x nbins grid.

Warning

Takes the first nfeat features in each grid cell, not the nfeat strongest. Sort the features by strength to achieve this.

- Seealso
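A minimal sketch of the gridding idea, assuming the features are first ordered by strength so that the first nfeat in each cell are also the strongest. The function `gridify_indices`, the explicit `width` and `height` arguments and the sample coordinates are assumptions for illustration only.

```python
import numpy as np

def gridify_indices(u, v, strength, width, height, nbins, nfeat):
    """Indices of at most nfeat features per cell of an nbins x nbins grid."""
    order = np.argsort(-strength)  # visit the strongest features first
    # Map pixel coordinates to grid cells, clamping points on the far edge
    col = np.minimum((u * nbins / width).astype(int), nbins - 1)
    row = np.minimum((v * nbins / height).astype(int), nbins - 1)
    counts = np.zeros((nbins, nbins), dtype=int)
    keep = []
    for i in order:
        if counts[row[i], col[i]] < nfeat:
            counts[row[i], col[i]] += 1
            keep.append(int(i))
    return keep

u = np.array([10.0, 20.0, 30.0, 90.0])
v = np.array([10.0, 15.0, 20.0, 90.0])
strength = np.array([0.1, 0.5, 0.3, 0.2])

keep = gridify_indices(u, v, strength, width=100, height=100, nbins=2, nfeat=2)
```

Three features fall in the top-left cell, so the weakest of them (index 0) is dropped.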

- property id

Image id for feature point

- Returns

image id

- Return type

int or list of int

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].id
-1
>>> orb[:5].id
array([-1, -1, -1, -1, -1])

Note

Defined by the id attribute of the image passed to the feature detector.

- match(other, ratio=0.75, crosscheck=False, metric='L2', sort=True, top=None, thresh=None)

Match point features

- Parameters

other (BaseFeature2D) – set of feature points

ratio (float, optional) – parameter for Lowe’s ratio test, defaults to 0.75

crosscheck (bool, optional) – perform left-right cross check, defaults to False

metric (str, optional) – distance metric, one of: ‘L1’, ‘L2’ [default], ‘hamming’, ‘hamming2’

sort (bool, optional) – sort features by strength, defaults to True

- Raises

ValueError – bad metric name provided

- Returns

set of candidate matches

- Return type

FeatureMatch instance

Return a match object that contains pairs of putative corresponding points. If crosscheck is True the ratio test is disabled.

Example:

>>> from machinevisiontoolbox import Image
>>> orb1 = Image.Read("eiffel-1.png").ORB()
>>> orb2 = Image.Read("eiffel-2.png").ORB()
>>> m = orb1.match(orb2)
>>> len(m)
39
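The ratio parameter refers to Lowe's ratio test, which keeps a candidate only when its best distance is clearly smaller than its second-best distance. The sketch below applies that test to a small hand-written distance matrix; the function `ratio_test_matches` is hypothetical and stands in for whatever matcher the toolbox calls internally.

```python
import numpy as np

def ratio_test_matches(D, ratio=0.75):
    """Return (i, j) pairs where the best distance in row i beats ratio * second best."""
    matches = []
    for i, row in enumerate(D):
        order = np.argsort(row)
        best, second = order[0], order[1]  # needs at least two candidates per row
        if row[best] < ratio * row[second]:
            matches.append((i, int(best)))
    return matches

# Rows: features in the first set; columns: features in the second set
D = np.array([[1.0, 9.0, 8.0],
              [5.0, 4.8, 5.1],
              [7.0, 6.0, 2.0]])

matches = ratio_test_matches(D)
```

Row 1 is rejected because its two nearest candidates (4.8 and 5.0) are almost equally close, which makes the match ambiguous.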

- Seealso

- property octave

Octave of feature

- Returns

scale space octave containing the feature

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].octave
0
>>> orb[:5].octave
array([0, 0, 0, 0, 0])

- property orientation

Orientation of feature

- Returns

Orientation in radians

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].orientation
0.9509157556747451
>>> orb[:5].orientation
array([0.9509, 1.4389, 1.3356, 5.0882, 1.5255])

- property p

Feature coordinates

- Returns

Feature centroids as matrix columns

- Return type

ndarray(2,N)

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].p
array([[847.],
       [689.]])
>>> orb[:5].p
array([[847., 717., 647.,  37., 965.],
       [689., 707., 690., 373., 691.]])

- plot(*args, ax=None, filled=False, color='blue', alpha=1, hand=False, handcolor='blue', handthickness=1, handalpha=1, **kwargs)

Plot features using Matplotlib

- Parameters

ax (axes, optional) – axes to plot onto, defaults to None

filled (bool, optional) – shapes are filled, defaults to False

hand (bool, optional) – draw clock hand to indicate orientation, defaults to False

handcolor (str, optional) – color of clock hand, defaults to ‘blue’

handthickness (int, optional) – thickness of clock hand in pixels, defaults to 1

handalpha (int, optional) – transparency of clock hand, defaults to 1

kwargs (dict) – options passed to matplotlib.Circle such as color, alpha, edgecolor, etc.

Plot circles to represent the position and scale of features on a Matplotlib axis. Orientation, if applicable, is indicated by a radial line from the circle centre to the circumference, like a clock hand.

- property scale

Scale of feature

- Returns

Scale

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].scale
31.0
>>> orb[:5].scale
array([31., 31., 31., 31., 31.])

- sort(by='strength', descending=True, inplace=False)

Sort features

- Parameters

by (str, optional) – sort by 'strength' [default] or 'scale'

descending (bool, optional) – sort in descending order, defaults to True

- Returns

sorted features

- Return type

BaseFeature2D instance

Example:

>>> from machinevisiontoolbox import Image
>>> orb = Image.Read("eiffel-1.png").ORB()
>>> orb2 = orb.sort('strength')
>>> orb2[:5].strength
array([0.0031, 0.003 , 0.0029, 0.0027, 0.0025])
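Sorting by a per-feature attribute amounts to computing an index order and applying it to every array that describes the features. The sketch below shows that idea with NumPy; `sort_order` is a hypothetical helper and the strength values are the five shown in the strength example, not a claim about how the toolbox sorts internally.

```python
import numpy as np

def sort_order(values, descending=True):
    """Return the indices that sort values, largest first by default."""
    order = np.argsort(values, kind="stable")
    return order[::-1] if descending else order

strength = np.array([0.0007, 0.0009, 0.0031, 0.0008, 0.0013])

order = sort_order(strength)
sorted_strength = strength[order]  # the same order would be applied to points, descriptors, etc.
```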

- property strength

Strength of feature

- Returns

Strength

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].strength
0.000677075469866395
>>> orb[:5].strength
array([0.0007, 0.0009, 0.0031, 0.0008, 0.0013])

- subset(N=100)

Select subset of features

- Parameters

N (int, optional) – the number of features to select, defaults to 100

- Returns

subset of features

- Return type

BaseFeature2D instance

Return N features selected in constant steps from the input feature vector, i.e. features 0, s, 2s, etc.

Example:

>>> from machinevisiontoolbox import Image
>>> orb = Image.Read("eiffel-1.png").ORB()
>>> len(orb)
500
>>> orb2 = orb.subset(50)
>>> len(orb2)
50
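One way to realise the constant-step selection is sketched below. The step rule `s = n_total // N` is an assumption chosen so that 500 features and N=50 give a step of 10; the helper `subset_indices` is hypothetical and the toolbox may compute the step differently.

```python
def subset_indices(n_total, N=100):
    """Indices 0, s, 2s, ... with at most N entries, using an assumed integer step."""
    step = max(n_total // N, 1)  # never step by zero when N exceeds n_total
    return list(range(0, n_total, step))[:N]

idx = subset_indices(500, 50)
```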

- support(images, N=50)

Find support region

- Parameters

images (Image or list of Image) – the image from which the feature was extracted

N (int, optional) – size of square window, defaults to 50

- Returns

support region

- Return type

Image instance

The support region about the feature’s centroid is extracted, rotated and scaled.

Example:

Note

If the features come from multiple images then the feature’s id attribute is used to index into images, which must be a list of Image objects.

- table()

Print features in tabular form

Each row in the table includes: the index in the feature vector, centroid coordinate, feature strength, feature scale and image id.

- Seealso

str

- property u

Horizontal coordinate of feature point

- Returns

Horizontal coordinate

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].u
847.0
>>> orb[:5].u
array([847., 717., 647.,  37., 965.])

- property v

Vertical coordinate of feature point

- Returns

Vertical coordinate

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].v
689.0
>>> orb[:5].v
array([689., 707., 690., 373., 691.])

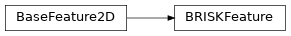

- class machinevisiontoolbox.ImagePointFeatures.BRISKFeature(kp=None, des=None, scale=False, orient=False, image=None)[source]

Create set of BRISK point features

Create set of 2D point features

- Parameters

kp (list of N elements, optional) – list of opencv.KeyPoint objects, one per feature, defaults to None

des (ndarray(N,M), optional) – Feature descriptor, each is an M-vector, defaults to None

scale (bool, optional) – features have an inherent scale, defaults to False

orient (bool, optional) – features have an inherent orientation, defaults to False

A BaseFeature2D object:

has a length, the number of feature points it contains

can be sliced to extract a subset of features

This object behaves like a list, allowing indexing, slicing and iteration over individual features. It also supports a number of convenience methods.

Note

OpenCV considers feature points as opencv.KeyPoint objects and the descriptors as a multirow NumPy array. This class provides a more convenient abstraction.

- __getitem__(i)

Get item from point feature object (base method)

- Parameters

i (int or slice) – index

- Raises

IndexError – index out of range

- Returns

subset of point features

- Return type

BaseFeature2D instance

This method allows a BaseFeature2D object to be indexed, sliced or iterated.

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> print(orb[:5])  # first 5 ORB features
ORBFeature features, 5 points
>>> print(orb[::50])  # every 50th ORB feature
ORBFeature features, 10 points

- Seealso

- __len__()

Number of features (base method)

- Returns

number of features

- Return type

int

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> len(orb)  # number of features
500
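The list-like behaviour provided by `__getitem__` and `__len__` can be illustrated with a small stand-alone container. The class `FeatureList` below is hypothetical and far simpler than the toolbox class; it only shows how indexing, slicing and iteration can all return objects of the same type.

```python
class FeatureList:
    """Toy container that mimics indexing, slicing and iteration over features."""

    def __init__(self, points):
        self._points = list(points)

    def __len__(self):
        return len(self._points)

    def __getitem__(self, i):
        if isinstance(i, slice):
            return FeatureList(self._points[i])
        # An out-of-range integer raises IndexError, which also ends iteration
        return FeatureList([self._points[i]])

    def __repr__(self):
        return f"FeatureList features, {len(self)} points"

feats = FeatureList([(847, 689), (717, 707), (647, 690)])
first_two = feats[:2]
single = feats[0]
count = sum(1 for _ in feats)  # iteration falls back to __getitem__ with 0, 1, 2, ...
```

Returning a container even for a single index means the same properties work on one feature or many.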

- Seealso

- property descriptor

Descriptor of feature

- Returns

Descriptor

- Return type

ndarray(N,M)

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].descriptor.shape
(32,)
>>> orb[0].descriptor
array([112, 44, 105, 97, 93, 249, 81, 39, 103, 229, 174, 153, 35, 45, 82, 20, 8, 166, 237, 28, 24, 106, 229, 62, 223, 197, 16, 123, 227, 12, 86, 126], dtype=uint8)
>>> orb[:5].descriptor.shape
(5, 32)

Note

For a single feature the descriptor is returned as a 1D array; for multiple features the descriptors are returned as the rows of a 2D array, one row per feature.
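The ORB descriptor shown above is a vector of 32 uint8 values, that is, a packed 256-bit binary string, and such descriptors are usually compared by Hamming distance (the number of differing bits). The following NumPy sketch shows that computation on two short made-up descriptors; `hamming_distance` is a hypothetical helper, not a toolbox function.

```python
import numpy as np

def hamming_distance(a, b):
    """Number of differing bits between two packed uint8 descriptors."""
    return int(np.unpackbits(np.bitwise_xor(a, b)).sum())

a = np.array([0b11110000, 0b00001111], dtype=np.uint8)
b = np.array([0b11110001, 0b00000000], dtype=np.uint8)

d = hamming_distance(a, b)  # one differing bit in the first byte, four in the second
```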

- distance(other, metric='L2')

Distance between feature sets

- Parameters

other (BaseFeature2D) – second set of features

metric (str, optional) – feature distance metric, one of “ncc”, “L1”, “L2” [default]

- Returns

distance between features

- Return type

ndarray(N1, N2)

Compute the distance matrix between two sets of features. If the first set of features has length N1 and the other is of length N2, then compute an \(N_1 \times N_2\) matrix where element \(D_{ij}\) is the distance between feature \(i\) in the first set and feature \(j\) in the other set. The position of the closest match in row \(i\) is the best matching feature to feature \(i\).

Example:

>>> from machinevisiontoolbox import Image
>>> orb1 = Image.Read("eiffel-1.png").ORB()
>>> orb2 = Image.Read("eiffel-2.png").ORB()
>>> dist = orb1.distance(orb2)
>>> dist.shape
(500, 500)

Note

The matrix is symmetric.

For the metric “L1” and “L2” the best match is the smallest distance

For the metric “ncc” the best match is the largest distance. A value over 0.8 is often considered to be a good match.
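For a similarity measure such as “ncc” the best match is the largest value rather than the smallest. The sketch below assumes that “ncc” means zero-mean normalised cross-correlation between descriptor vectors, which the text above does not spell out; `ncc_matrix` and the sample descriptors are hypothetical.

```python
import numpy as np

def ncc_matrix(des1, des2):
    """Zero-mean normalised cross-correlation between rows of des1 and des2."""
    a = des1.astype(float)
    b = des2.astype(float)
    a = a - a.mean(axis=1, keepdims=True)
    b = b - b.mean(axis=1, keepdims=True)
    # Constant descriptors would have zero norm; they are not handled in this sketch
    a /= np.linalg.norm(a, axis=1, keepdims=True)
    b /= np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T

des1 = np.array([[1, 2, 3, 4],
                 [4, 3, 2, 1]])
des2 = np.array([[2, 4, 6, 8],
                 [1, 0, 1, 0],
                 [8, 6, 4, 2]])

S = ncc_matrix(des1, des2)
best = S.argmax(axis=1)     # largest similarity wins
good = S.max(axis=1) > 0.8  # threshold suggested in the note above
```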

- Seealso

- drawKeypoints(image, drawing=None, isift=None, flags=4, **kwargs)

Render keypoints into image

- Parameters

image (Image) – original image

drawing (_type_, optional) – _description_, defaults to None

isift (_type_, optional) – _description_, defaults to None

flags (_type_, optional) – _description_, defaults to cv.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS

- Returns

image with rendered keypoints

- Return type

Image instance

If image is None then the keypoints are rendered over a black background.

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].p
array([[847.],
       [689.]])
>>> orb[:5].p
array([[847., 717., 647.,  37., 965.],
       [689., 707., 690., 373., 691.]])

- filter(**kwargs)

Filter features

- Parameters

kwargs – the filter parameters

- Returns

filtered features

- Return type

BaseFeature2D instance

The filter is defined by arguments:

| argument | value | select if |
|---|---|---|
| scale | (minimum, maximum) | minimum <= scale <= maximum |
| minscale | minimum | minimum <= scale |
| maxscale | maximum | scale <= maximum |
| strength | (minimum, maximum) | minimum <= strength <= maximum |
| minstrength | minimum | minimum <= strength |
| percentstrength | percent | strength >= percent * max(strength) |
| nstrongest | N | strength |

Example:

>>> from machinevisiontoolbox import Image
>>> orb = Image.Read("eiffel-1.png").ORB()
>>> len(orb)
500
>>> orb2 = orb.filter(minstrength=0.001)
>>> len(orb2)
407

Note

If value is a range then numpy.Inf or -numpy.Inf can be used as values.

- gridify(nbins, nfeat)

Sort features into grid

- Parameters

nfeat (int) – maximum number of features per grid cell

nbins (int) – number of grid cells horizontally and vertically

- Returns

set of gridded features

- Return type

BaseFeature2D instance

Select features such that no more than nfeat features fall into each grid cell. The image is divided into an nbins x nbins grid.

Warning

Takes the first nfeat features in each grid cell, not the nfeat strongest. Sort the features by strength to achieve this.

- Seealso

- property id

Image id for feature point

- Returns

image id

- Return type

int or list of int

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].id
-1
>>> orb[:5].id
array([-1, -1, -1, -1, -1])

Note

Defined by the id attribute of the image passed to the feature detector.

- match(other, ratio=0.75, crosscheck=False, metric='L2', sort=True, top=None, thresh=None)

Match point features

- Parameters

other (BaseFeature2D) – set of feature points

ratio (float, optional) – parameter for Lowe’s ratio test, defaults to 0.75

crosscheck (bool, optional) – perform left-right cross check, defaults to False

metric (str, optional) – distance metric, one of: ‘L1’, ‘L2’ [default], ‘hamming’, ‘hamming2’

sort (bool, optional) – sort features by strength, defaults to True

- Raises

ValueError – bad metric name provided

- Returns

set of candidate matches

- Return type

FeatureMatch instance

Return a match object that contains pairs of putative corresponding points. If crosscheck is True the ratio test is disabled.

Example:

>>> from machinevisiontoolbox import Image
>>> orb1 = Image.Read("eiffel-1.png").ORB()
>>> orb2 = Image.Read("eiffel-2.png").ORB()
>>> m = orb1.match(orb2)
>>> len(m)
39
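The left-right cross check enabled by crosscheck keeps a pair only when each feature is the other's nearest neighbour. A minimal sketch of that idea on a hand-written distance matrix follows; `cross_check` is a hypothetical helper, and the real matcher may differ in detail.

```python
import numpy as np

def cross_check(D):
    """Return (i, j) pairs that are mutual nearest neighbours in distance matrix D."""
    fwd = D.argmin(axis=1)  # best column for each row (first set -> second set)
    bwd = D.argmin(axis=0)  # best row for each column (second set -> first set)
    return [(i, int(j)) for i, j in enumerate(fwd) if bwd[j] == i]

D = np.array([[1.0, 5.0, 6.0],
              [2.0, 7.0, 8.0],
              [9.0, 3.0, 4.0]])

pairs = cross_check(D)
```

Feature 1 of the first set prefers column 0, but column 0 prefers feature 0, so that pair is discarded.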

- Seealso

- property octave

Octave of feature

- Returns

scale space octave containing the feature

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].octave
0
>>> orb[:5].octave
array([0, 0, 0, 0, 0])

- property orientation

Orientation of feature

- Returns

Orientation in radians

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].orientation
0.9509157556747451
>>> orb[:5].orientation
array([0.9509, 1.4389, 1.3356, 5.0882, 1.5255])

- property p

Feature coordinates

- Returns

Feature centroids as matrix columns

- Return type

ndarray(2,N)

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].p
array([[847.],
       [689.]])
>>> orb[:5].p
array([[847., 717., 647.,  37., 965.],
       [689., 707., 690., 373., 691.]])

- plot(*args, ax=None, filled=False, color='blue', alpha=1, hand=False, handcolor='blue', handthickness=1, handalpha=1, **kwargs)

Plot features using Matplotlib

- Parameters

ax (axes, optional) – axes to plot onto, defaults to None

filled (bool, optional) – shapes are filled, defaults to False

hand (bool, optional) – draw clock hand to indicate orientation, defaults to False

handcolor (str, optional) – color of clock hand, defaults to ‘blue’

handthickness (int, optional) – thickness of clock hand in pixels, defaults to 1

handalpha (int, optional) – transparency of clock hand, defaults to 1

kwargs (dict) – options passed to matplotlib.Circle such as color, alpha, edgecolor, etc.

Plot circles to represent the position and scale of features on a Matplotlib axis. Orientation, if applicable, is indicated by a radial line from the circle centre to the circumference, like a clock hand.

- property scale

Scale of feature

- Returns

Scale

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].scale
31.0
>>> orb[:5].scale
array([31., 31., 31., 31., 31.])

- sort(by='strength', descending=True, inplace=False)

Sort features

- Parameters

by (str, optional) – sort by 'strength' [default] or 'scale'

descending (bool, optional) – sort in descending order, defaults to True

- Returns

sorted features

- Return type

BaseFeature2D instance

Example:

>>> from machinevisiontoolbox import Image
>>> orb = Image.Read("eiffel-1.png").ORB()
>>> orb2 = orb.sort('strength')
>>> orb2[:5].strength
array([0.0031, 0.003 , 0.0029, 0.0027, 0.0025])

- property strength

Strength of feature

- Returns

Strength

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].strength
0.000677075469866395
>>> orb[:5].strength
array([0.0007, 0.0009, 0.0031, 0.0008, 0.0013])

- subset(N=100)

Select subset of features

- Parameters

N (int, optional) – the number of features to select, defaults to 100

- Returns

subset of features

- Return type

BaseFeature2D instance

Return N features selected in constant steps from the input feature vector, i.e. features 0, s, 2s, etc.

Example:

>>> from machinevisiontoolbox import Image
>>> orb = Image.Read("eiffel-1.png").ORB()
>>> len(orb)
500
>>> orb2 = orb.subset(50)
>>> len(orb2)
50

- support(images, N=50)

Find support region

- Parameters

images (Image or list of Image) – the image from which the feature was extracted

N (int, optional) – size of square window, defaults to 50

- Returns

support region

- Return type

Image instance

The support region about the feature’s centroid is extracted, rotated and scaled.

Example:

Note

If the features come from multiple images then the feature’s id attribute is used to index into images, which must be a list of Image objects.

- table()

Print features in tabular form

Each row in the table includes: the index in the feature vector, centroid coordinate, feature strength, feature scale and image id.

- Seealso

str

- property u

Horizontal coordinate of feature point

- Returns

Horizontal coordinate

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].u
847.0
>>> orb[:5].u
array([847., 717., 647.,  37., 965.])

- property v

Vertical coordinate of feature point

- Returns

Vertical coordinate

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].v
689.0
>>> orb[:5].v
array([689., 707., 690., 373., 691.])

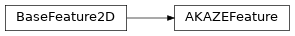

- class machinevisiontoolbox.ImagePointFeatures.AKAZEFeature(kp=None, des=None, scale=False, orient=False, image=None)[source]

Create set of AKAZE point features

Create set of 2D point features

- Parameters

kp (list of N elements, optional) – list of opencv.KeyPoint objects, one per feature, defaults to None

des (ndarray(N,M), optional) – Feature descriptor, each is an M-vector, defaults to None

scale (bool, optional) – features have an inherent scale, defaults to False

orient (bool, optional) – features have an inherent orientation, defaults to False

A BaseFeature2D object:

has a length, the number of feature points it contains

can be sliced to extract a subset of features

This object behaves like a list, allowing indexing, slicing and iteration over individual features. It also supports a number of convenience methods.

Note

OpenCV considers feature points as opencv.KeyPoint objects and the descriptors as a multirow NumPy array. This class provides a more convenient abstraction.

- __getitem__(i)

Get item from point feature object (base method)

- Parameters

i (int or slice) – index

- Raises

IndexError – index out of range

- Returns

subset of point features

- Return type

BaseFeature2D instance

This method allows a BaseFeature2D object to be indexed, sliced or iterated.

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> print(orb[:5])  # first 5 ORB features
ORBFeature features, 5 points
>>> print(orb[::50])  # every 50th ORB feature
ORBFeature features, 10 points

- Seealso

- __len__()

Number of features (base method)

- Returns

number of features

- Return type

int

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> len(orb)  # number of features
500

- Seealso

- property descriptor

Descriptor of feature

- Returns

Descriptor

- Return type

ndarray(N,M)

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].descriptor.shape
(32,)
>>> orb[0].descriptor
array([112, 44, 105, 97, 93, 249, 81, 39, 103, 229, 174, 153, 35, 45, 82, 20, 8, 166, 237, 28, 24, 106, 229, 62, 223, 197, 16, 123, 227, 12, 86, 126], dtype=uint8)
>>> orb[:5].descriptor.shape
(5, 32)

Note

For a single feature the descriptor is returned as a 1D array; for multiple features the descriptors are returned as the rows of a 2D array, one row per feature.

- distance(other, metric='L2')

Distance between feature sets

- Parameters

other (BaseFeature2D) – second set of features

metric (str, optional) – feature distance metric, one of “ncc”, “L1”, “L2” [default]

- Returns

distance between features

- Return type

ndarray(N1, N2)

Compute the distance matrix between two sets of features. If the first set of features has length N1 and the other is of length N2, then compute an \(N_1 \times N_2\) matrix where element \(D_{ij}\) is the distance between feature \(i\) in the first set and feature \(j\) in the other set. The position of the closest match in row \(i\) is the best matching feature to feature \(i\).

Example:

>>> from machinevisiontoolbox import Image
>>> orb1 = Image.Read("eiffel-1.png").ORB()
>>> orb2 = Image.Read("eiffel-2.png").ORB()
>>> dist = orb1.distance(orb2)
>>> dist.shape
(500, 500)

Note

The matrix is symmetric.

For the metric “L1” and “L2” the best match is the smallest distance

For the metric “ncc” the best match is the largest distance. A value over 0.8 is often considered to be a good match.

- Seealso

- drawKeypoints(image, drawing=None, isift=None, flags=4, **kwargs)

Render keypoints into image

- Parameters

image (Image) – original image

drawing (_type_, optional) – _description_, defaults to None

isift (_type_, optional) – _description_, defaults to None

flags (_type_, optional) – _description_, defaults to cv.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS

- Returns

image with rendered keypoints

- Return type

Image instance

If image is None then the keypoints are rendered over a black background.

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].p
array([[847.],
       [689.]])
>>> orb[:5].p
array([[847., 717., 647.,  37., 965.],
       [689., 707., 690., 373., 691.]])

- filter(**kwargs)

Filter features

- Parameters

kwargs – the filter parameters

- Returns

filtered features

- Return type

BaseFeature2D instance

The filter is defined by arguments:

| argument | value | select if |
|---|---|---|
| scale | (minimum, maximum) | minimum <= scale <= maximum |
| minscale | minimum | minimum <= scale |
| maxscale | maximum | scale <= maximum |
| strength | (minimum, maximum) | minimum <= strength <= maximum |
| minstrength | minimum | minimum <= strength |
| percentstrength | percent | strength >= percent * max(strength) |
| nstrongest | N | strength |

Example:

>>> from machinevisiontoolbox import Image
>>> orb = Image.Read("eiffel-1.png").ORB()
>>> len(orb)
500
>>> orb2 = orb.filter(minstrength=0.001)
>>> len(orb2)
407

Note

If value is a range then numpy.Inf or -numpy.Inf can be used as values.

- gridify(nbins, nfeat)

Sort features into grid

- Parameters

nfeat (int) – maximum number of features per grid cell

nbins (int) – number of grid cells horizontally and vertically

- Returns

set of gridded features

- Return type

BaseFeature2D instance

Select features such that no more than nfeat features fall into each grid cell. The image is divided into an nbins x nbins grid.

Warning

Takes the first nfeat features in each grid cell, not the nfeat strongest. Sort the features by strength to achieve this.

- Seealso

- property id

Image id for feature point

- Returns

image id

- Return type

int or list of int

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].id
-1
>>> orb[:5].id
array([-1, -1, -1, -1, -1])

Note

Defined by the id attribute of the image passed to the feature detector.

- match(other, ratio=0.75, crosscheck=False, metric='L2', sort=True, top=None, thresh=None)

Match point features

- Parameters

other (BaseFeature2D) – set of feature points

ratio (float, optional) – parameter for Lowe’s ratio test, defaults to 0.75

crosscheck (bool, optional) – perform left-right cross check, defaults to False

metric (str, optional) – distance metric, one of: ‘L1’, ‘L2’ [default], ‘hamming’, ‘hamming2’

sort (bool, optional) – sort features by strength, defaults to True

- Raises

ValueError – bad metric name provided

- Returns

set of candidate matches

- Return type

FeatureMatch instance

Return a match object that contains pairs of putative corresponding points. If crosscheck is True the ratio test is disabled.

Example:

>>> from machinevisiontoolbox import Image
>>> orb1 = Image.Read("eiffel-1.png").ORB()
>>> orb2 = Image.Read("eiffel-2.png").ORB()
>>> m = orb1.match(orb2)
>>> len(m)
39

- Seealso

- property octave

Octave of feature

- Returns

scale space octave containing the feature

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].octave
0
>>> orb[:5].octave
array([0, 0, 0, 0, 0])

- property orientation

Orientation of feature

- Returns

Orientation in radians

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].orientation
0.9509157556747451
>>> orb[:5].orientation
array([0.9509, 1.4389, 1.3356, 5.0882, 1.5255])

- property p

Feature coordinates

- Returns

Feature centroids as matrix columns

- Return type

ndarray(2,N)

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].p
array([[847.],
       [689.]])
>>> orb[:5].p
array([[847., 717., 647.,  37., 965.],
       [689., 707., 690., 373., 691.]])

- plot(*args, ax=None, filled=False, color='blue', alpha=1, hand=False, handcolor='blue', handthickness=1, handalpha=1, **kwargs)

Plot features using Matplotlib

- Parameters

ax (axes, optional) – axes to plot onto, defaults to None

filled (bool, optional) – shapes are filled, defaults to False

hand (bool, optional) – draw clock hand to indicate orientation, defaults to False

handcolor (str, optional) – color of clock hand, defaults to ‘blue’

handthickness (int, optional) – thickness of clock hand in pixels, defaults to 1

handalpha (int, optional) – transparency of clock hand, defaults to 1

kwargs (dict) – options passed to matplotlib.Circle such as color, alpha, edgecolor, etc.

Plot circles to represent the position and scale of features on a Matplotlib axis. Orientation, if applicable, is indicated by a radial line from the circle centre to the circumference, like a clock hand.
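The clock-hand geometry described above reduces to one line of trigonometry: the hand runs from the circle centre to the point on the circumference at the feature's orientation. The sketch below assumes the angle is measured from the positive u-axis towards the positive v-axis, which is an assumption about the convention rather than something stated here; `clock_hand` is a hypothetical helper.

```python
import math

def clock_hand(u, v, radius, orientation):
    """End point of a radial line from (u, v) at the given orientation in radians."""
    return (u + radius * math.cos(orientation),
            v + radius * math.sin(orientation))

# A feature at (847, 689) with scale 31 and orientation pi/2
tip = clock_hand(847.0, 689.0, 31.0, math.pi / 2)
```

With image coordinates, where v increases downwards, an orientation of pi/2 under this convention points the hand straight down the image.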

- property scale

Scale of feature

- Returns

Scale

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].scale
31.0
>>> orb[:5].scale
array([31., 31., 31., 31., 31.])

- sort(by='strength', descending=True, inplace=False)

Sort features

- Parameters

by (str, optional) – sort by 'strength' [default] or 'scale'

descending (bool, optional) – sort in descending order, defaults to True

- Returns

sorted features

- Return type

BaseFeature2D instance

Example:

>>> from machinevisiontoolbox import Image
>>> orb = Image.Read("eiffel-1.png").ORB()
>>> orb2 = orb.sort('strength')
>>> orb2[:5].strength
array([0.0031, 0.003 , 0.0029, 0.0027, 0.0025])

- property strength

Strength of feature

- Returns

Strength

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].strength
0.000677075469866395
>>> orb[:5].strength
array([0.0007, 0.0009, 0.0031, 0.0008, 0.0013])

- subset(N=100)

Select subset of features

- Parameters

N (int, optional) – the number of features to select, defaults to 100

- Returns

subset of features

- Return type

BaseFeature2D instance

Return N features selected in constant steps from the input feature vector, i.e. features 0, s, 2s, etc.

Example:

>>> from machinevisiontoolbox import Image
>>> orb = Image.Read("eiffel-1.png").ORB()
>>> len(orb)
500
>>> orb2 = orb.subset(50)
>>> len(orb2)
50

- support(images, N=50)

Find support region

- Parameters

images (Image or list of Image) – the image from which the feature was extracted

N (int, optional) – size of square window, defaults to 50

- Returns

support region

- Return type

Image instance

The support region about the feature’s centroid is extracted, rotated and scaled.

Example:

Note

If the features come from multiple images then the feature’s id attribute is used to index into images, which must be a list of Image objects.

- table()

Print features in tabular form

Each row in the table includes: the index in the feature vector, centroid coordinate, feature strength, feature scale and image id.

- Seealso

str

- property u

Horizontal coordinate of feature point

- Returns

Horizontal coordinate

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].u
847.0
>>> orb[:5].u
array([847., 717., 647.,  37., 965.])

- property v

Vertical coordinate of feature point

- Returns

Vertical coordinate

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].v
689.0
>>> orb[:5].v
array([689., 707., 690., 373., 691.])

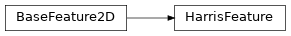

- class machinevisiontoolbox.ImagePointFeatures.HarrisFeature(kp=None, des=None, scale=False, orient=False, image=None)[source]

Create set of Harris corner features

Create set of 2D point features

- Parameters

kp (list of N elements, optional) – list of opencv.KeyPoint objects, one per feature, defaults to None

des (ndarray(N,M), optional) – Feature descriptor, each is an M-vector, defaults to None

scale (bool, optional) – features have an inherent scale, defaults to False

orient (bool, optional) – features have an inherent orientation, defaults to False

A BaseFeature2D object:

has a length, the number of feature points it contains

can be sliced to extract a subset of features

This object behaves like a list, allowing indexing, slicing and iteration over individual features. It also supports a number of convenience methods.

Note

OpenCV considers feature points as opencv.KeyPoint objects and the descriptors as a multirow NumPy array. This class provides a more convenient abstraction.

- __getitem__(i)

Get item from point feature object (base method)

- Parameters

i (int or slice) – index

- Raises

IndexError – index out of range

- Returns

subset of point features

- Return type

BaseFeature2D instance

This method allows a BaseFeature2D object to be indexed, sliced or iterated.

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> print(orb[:5])  # first 5 ORB features
ORBFeature features, 5 points
>>> print(orb[::50])  # every 50th ORB feature
ORBFeature features, 10 points

- Seealso

- __len__()

Number of features (base method)

- Returns

number of features

- Return type

int

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> len(orb)  # number of features
500

- Seealso

- property descriptor

Descriptor of feature

- Returns

Descriptor

- Return type

ndarray(N,M)

Example:

>>> from machinevisiontoolbox import Image >>> img = Image.Read("eiffel-1.png") >>> orb = img.ORB() >>> orb[0].descriptor.shape (32,) >>> orb[0].descriptor array([112, 44, 105, 97, 93, 249, 81, 39, 103, 229, 174, 153, 35, 45, 82, 20, 8, 166, 237, 28, 24, 106, 229, 62, 223, 197, 16, 123, 227, 12, 86, 126], dtype=uint8) >>> orb[:5].descriptor.shape (5, 32)

Note

For a single feature the descriptor is returned as a 1D array of length M. For multiple features it is returned as a 2D array with one descriptor per row, as the (5, 32) shape in the example shows.

- distance(other, metric='L2')

Distance between feature sets

- Parameters

other (BaseFeature2D) – second set of features

metric (str, optional) – feature distance metric, one of “ncc”, “L1”, “L2” [default]

- Returns

distance between features

- Return type

ndarray(N1, N2)

Compute the distance matrix between two sets of features. If the first set of features has length N1 and other has length N2, then compute an \(N_1 \times N_2\) matrix where element \(D_{ij}\) is the distance between feature \(i\) in the first set and feature \(j\) in the other set. The column index of the closest match in row \(i\) identifies the feature in other that best matches feature \(i\).

Example:

>>> from machinevisiontoolbox import Image
>>> orb1 = Image.Read("eiffel-1.png").ORB()
>>> orb2 = Image.Read("eiffel-2.png").ORB()
>>> dist = orb1.distance(orb2)
>>> dist.shape
(500, 500)

Note

The distance measure is symmetric, so exchanging the two feature sets transposes the matrix.

For the metrics “L1” and “L2” the best match is the smallest value.

For the metric “ncc” the best match is the largest value. A value over 0.8 is often considered to be a good match.
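
The structure of the distance matrix can be sketched with the standard library alone. This is an illustration under stated assumptions, not the toolbox's code: the helper names `l1`, `l2` and `distance_matrix` are hypothetical, the descriptors are made-up lists rather than NumPy arrays, and only the “L1” and “L2” metrics are covered.

```python
import math


def l1(a, b):
    """Sum of absolute differences between two descriptors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def l2(a, b):
    """Euclidean distance between two descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def distance_matrix(des1, des2, metric="L2"):
    """Return an N1 x N2 list of lists; entry [i][j] compares des1[i] with des2[j]."""
    metrics = {"L1": l1, "L2": l2}
    if metric not in metrics:
        raise ValueError(f"unsupported metric: {metric}")
    dist = metrics[metric]
    return [[dist(a, b) for b in des2] for a in des1]


# two small, made-up descriptor sets (N1 = 2, N2 = 3, M = 2)
des1 = [[0, 0], [3, 4]]
des2 = [[0, 1], [3, 4], [6, 8]]

D = distance_matrix(des1, des2)

# for L1 and L2 the best match for feature i is the column holding
# the smallest entry of row i
best = [row.index(min(row)) for row in D]
```

For a similarity measure such as “ncc”, where larger is better, the last step would pick the largest entry of each row instead.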

- drawKeypoints(image, drawing=None, isift=None, flags=4, **kwargs)

Render keypoints into image

- Parameters

image (Image) – original image

drawing (_type_, optional) – _description_, defaults to None

isift (_type_, optional) – _description_, defaults to None

flags (_type_, optional) – _description_, defaults to cv.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS

- Returns

image with rendered keypoints

- Return type

Image instance

If image is None then the keypoints are rendered over a black background.

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> out = orb.drawKeypoints(img)  # Image with the keypoints rendered over img

- filter(**kwargs)

Filter features

- Parameters

kwargs – the filter parameters

- Returns

filtered features

- Return type

BaseFeature2D instance

The filter is defined by arguments:

argument         value               select if
scale            (minimum, maximum)  minimum <= scale <= maximum
minscale         minimum             minimum <= scale
maxscale         maximum             scale <= maximum
strength         (minimum, maximum)  minimum <= strength <= maximum
minstrength      minimum             minimum <= strength
percentstrength  percent             strength >= percent * max(strength)
nstrongest       N                   strength

Example:

>>> from machinevisiontoolbox import Image
>>> orb = Image.Read("eiffel-1.png").ORB()
>>> len(orb)
500
>>> orb2 = orb.filter(minstrength=0.001)
>>> len(orb2)
407

Note

If value is a range then numpy.Inf or -numpy.Inf can be used as values.
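
The selection rules in the table can be sketched as follows. This is a hedged illustration rather than the toolbox's implementation: `filter_features` is a hypothetical helper, features are plain dictionaries with made-up `scale` and `strength` values, only three of the documented arguments are covered, and the `percentstrength` threshold is assumed to be taken relative to the strongest feature in the original set.

```python
import math


def filter_features(features, scale=None, minstrength=None, percentstrength=None):
    """Return the features that satisfy every supplied criterion."""
    keep = list(features)
    if scale is not None:
        lo, hi = scale  # (minimum, maximum); either bound may be infinite
        keep = [f for f in keep if lo <= f["scale"] <= hi]
    if minstrength is not None:
        keep = [f for f in keep if f["strength"] >= minstrength]
    if percentstrength is not None and features:
        # assumption: threshold is relative to the strongest input feature
        threshold = percentstrength * max(f["strength"] for f in features)
        keep = [f for f in keep if f["strength"] >= threshold]
    return keep


# made-up features for illustration
features = [
    {"scale": 2.0, "strength": 0.0031},
    {"scale": 8.0, "strength": 0.0007},
    {"scale": 31.0, "strength": 0.0013},
    {"scale": 31.0, "strength": 0.0029},
]

strong = filter_features(features, minstrength=0.001)
large = filter_features(features, scale=(8.0, math.inf))  # open-ended range
near_top = filter_features(features, percentstrength=0.9)
```

Passing an infinite bound, as in the `scale` call, turns a two-sided range into a one-sided test, which is the purpose of the note above.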

- gridify(nbins, nfeat)

Sort features into grid

- Parameters

nfeat (int) – maximum number of features per grid cell

nbins (int) – number of grid cells horizontally and vertically

- Returns

set of gridded features

- Return type

BaseFeature2D instance

Select features such that no more than nfeat features fall into each grid cell. The image is divided into an nbins x nbins grid.

Warning

Takes the first nfeat features in each grid cell, not the nfeat strongest. Sort the features by strength to achieve this.
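
A minimal sketch of the gridding idea, and of why the input order matters, is given below. It is not the toolbox's implementation: the function here takes the image `width` and `height` explicitly (an assumption made so the example is self-contained), and features are made-up `(u, v, strength)` tuples.

```python
def gridify(features, width, height, nbins, nfeat):
    """Keep at most nfeat features per cell of an nbins x nbins grid."""
    counts = {}
    kept = []
    for u, v, strength in features:
        # cell indices, clamped so points on the far edge fall in the last cell
        col = min(int(u * nbins / width), nbins - 1)
        row = min(int(v * nbins / height), nbins - 1)
        n = counts.get((row, col), 0)
        if n < nfeat:
            # first come, first served: earlier features win the cell
            counts[(row, col)] = n + 1
            kept.append((u, v, strength))
    return kept


# made-up features in a 100 x 100 image; three fall in the top-left cell
features = [(10, 10, 0.1), (20, 15, 0.9), (30, 5, 0.5), (90, 90, 0.2)]

# unsorted input keeps whichever feature appears first in each cell
unsorted_pick = gridify(features, 100, 100, nbins=2, nfeat=1)

# sorting by descending strength first keeps the strongest feature per cell
by_strength = sorted(features, key=lambda f: f[2], reverse=True)
strongest_pick = gridify(by_strength, 100, 100, nbins=2, nfeat=1)
```

With the unsorted input the weak feature at (10, 10) occupies the top-left cell, whereas after sorting the strongest feature at (20, 15) is kept instead.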

- property id

Image id for feature point

- Returns

image id

- Return type

int or list of int

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].id
-1
>>> orb[:5].id
array([-1, -1, -1, -1, -1])

Note

Defined by the id attribute of the image passed to the feature detector.

- match(other, ratio=0.75, crosscheck=False, metric='L2', sort=True, top=None, thresh=None)

Match point features

- Parameters

other (BaseFeature2D) – set of feature points

ratio (float, optional) – parameter for Lowe’s ratio test, defaults to 0.75

crosscheck (bool, optional) – perform left-right cross check, defaults to False

metric (str, optional) – distance metric, one of: ‘L1’, ‘L2’ [default], ‘hamming’, ‘hamming2’

sort (bool, optional) – sort features by strength, defaults to True

- Raises

ValueError – bad metric name provided

- Returns

set of candidate matches

- Return type

FeatureMatch instance

Return a match object that contains pairs of putative corresponding points. If crosscheck is True the ratio test is disabled.

Example:

>>> from machinevisiontoolbox import Image
>>> orb1 = Image.Read("eiffel-1.png").ORB()
>>> orb2 = Image.Read("eiffel-2.png").ORB()
>>> m = orb1.match(orb2)
>>> len(m)
39
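
The two candidate-selection strategies named in the parameters, Lowe's ratio test and the left-right cross check, can be sketched on a small distance matrix. This is an illustration only and makes no claim about how the toolbox performs matching: the function names and the matrix values are hypothetical, and smaller entries are assumed to mean closer descriptors.

```python
def ratio_test_matches(D, ratio=0.75):
    """Return (i, j) pairs that pass Lowe's ratio test on distance matrix D."""
    matches = []
    for i, row in enumerate(D):
        if len(row) < 2:
            continue  # the test needs a second-best candidate
        order = sorted(range(len(row)), key=row.__getitem__)
        best, second = order[0], order[1]
        # accept only if the best distance is clearly below the runner-up
        if row[best] < ratio * row[second]:
            matches.append((i, best))
    return matches


def crosscheck_matches(D):
    """Return (i, j) pairs where i and j are each other's nearest neighbour."""
    matches = []
    for i, row in enumerate(D):
        j = row.index(min(row))
        column = [r[j] for r in D]
        if column.index(min(column)) == i:
            matches.append((i, j))
    return matches


# made-up 3 x 3 distance matrix: rows index the first set, columns the second
D = [
    [1.0, 5.0, 10.0],
    [4.0, 3.5, 5.0],
    [9.0, 0.5, 6.0],
]

ratio_pairs = ratio_test_matches(D)  # row 1 is rejected as ambiguous
cross_pairs = crosscheck_matches(D)  # row 1 is rejected: column 1 prefers row 2
```

Both strategies discard the second row here, but for different reasons: the ratio test because its two best candidates are nearly equidistant, the cross check because its best candidate is a better match for another feature.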

- property octave

Octave of feature

- Returns

scale space octave containing the feature

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].octave
0
>>> orb[:5].octave
array([0, 0, 0, 0, 0])

- property orientation

Orientation of feature

- Returns

Orientation in radians

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].orientation
0.9509157556747451
>>> orb[:5].orientation
array([0.9509, 1.4389, 1.3356, 5.0882, 1.5255])

- property p

Feature coordinates

- Returns

Feature centroids as matrix columns

- Return type

ndarray(2,N)

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].p
array([[847.],
       [689.]])
>>> orb[:5].p
array([[847., 717., 647., 37., 965.],
       [689., 707., 690., 373., 691.]])

- plot(*args, ax=None, filled=False, color='blue', alpha=1, hand=False, handcolor='blue', handthickness=1, handalpha=1, **kwargs)

Plot features using Matplotlib

- Parameters

ax (axes, optional) – axes to plot onto, defaults to None

filled (bool, optional) – shapes are filled, defaults to False

hand (bool, optional) – draw clock hand to indicate orientation, defaults to False

handcolor (str, optional) – color of clock hand, defaults to ‘blue’

handthickness (int, optional) – thickness of clock hand in pixels, defaults to 1

handalpha (int, optional) – transparency of clock hand, defaults to 1

kwargs (dict) – options passed to matplotlib.Circle such as color, alpha, edgecolor, etc.

Plot circles to represent the position and scale of features on a Matplotlib axis. Orientation, if applicable, is indicated by a radial line from the circle centre to the circumference, like a clock hand.
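
The geometry of the clock hand can be sketched with a little trigonometry. This is an assumption-laden illustration, not the toolbox's drawing code: `hand_endpoints` is a hypothetical helper, the circle radius is assumed to equal the feature scale, and the angle is assumed to be measured in radians from the horizontal image axis.

```python
import math


def hand_endpoints(u, v, radius, theta):
    """Return the two ends of a radial line from the centre to the circumference."""
    tip = (u + radius * math.cos(theta), v + radius * math.sin(theta))
    return (u, v), tip


# position, scale and orientation taken from the first ORB feature
# shown in the examples on this page
centre, tip = hand_endpoints(847.0, 689.0, 31.0, 0.9509157556747451)
```

The tip always lies at distance `radius` from the centre, so the hand touches the circle that represents the feature's scale.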

- property scale

Scale of feature

- Returns

Scale

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].scale
31.0
>>> orb[:5].scale
array([31., 31., 31., 31., 31.])

- sort(by='strength', descending=True, inplace=False)

Sort features

- Parameters

by (str, optional) – sort by 'strength' [default] or 'scale'

descending (bool, optional) – sort in descending order, defaults to True

- Returns

sorted features

- Return type

BaseFeature2D instance

Example:

>>> from machinevisiontoolbox import Image
>>> orb = Image.Read("eiffel-1.png").ORB()
>>> orb2 = orb.sort('strength')
>>> orb2[:5].strength
array([0.0031, 0.003 , 0.0029, 0.0027, 0.0025])

- property strength

Strength of feature

- Returns

Strength

- Return type

float or list of float

Example:

>>> from machinevisiontoolbox import Image
>>> img = Image.Read("eiffel-1.png")
>>> orb = img.ORB()
>>> orb[0].strength
0.000677075469866395
>>> orb[:5].strength
array([0.0007, 0.0009, 0.0031, 0.0008, 0.0013])

- subset(N=100)

Select subset of features

- Parameters

N (int, optional) – the number of features to select, defaults to 100

- Returns

subset of features