Central projective camera

Model the geometry of a central projective camera.

- class machinevisiontoolbox.Camera.CentralCamera(f=1, distortion=None, **kwargs)[source]

Create central camera projection model

- Parameters

f (float, optional) – focal length, defaults to 8mm

distortion (array_like(5), optional) – camera distortion parameters, defaults to None

kwargs – arguments passed to

CameraBaseconstructor

A camera object contains methods for projecting 3D points and lines to the image plane, as well as supporting a virtual image plane onto which 3D points and lines can be drawn.

- References

Robotics, Vision & Control for Python, Section 13.1, P. Corke, Springer 2023.

- Seealso

- classmethod Default(**kwargs)[source]

Set default central camera parameters

- Returns

central camera model

- Return type

CentralCamerainstance

Initialize a central camera with: focal length of 8mm, \(10\mu\) with principal point at (500, 500).

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(name='camera1') >>> camera Name: camera1 [CentralCamera] pixel size: 1e-05 x 1e-05 image size: 1000 x 1000 pose: t = 0, 0, 0; rpy/yxz = 0°, 0°, 0° principal pt: [500. 500.] focal length: [0.008 0.008]

- References

Robotics, Vision & Control for Python, Section 13.1, P. Corke, Springer 2023.

- Seealso

- project_point(P, pose=None, objpose=None, behind=True, visibility=False, retinal=False, **kwargs)[source]

Project 3D points to image plane

- Parameters

P (array_like(3), array_like(3,n), array_like(4), array_like(4,n)) – 3D world point or points in Euclidean or homogeneous form

pose (

SE3, optional) – camera pose with respect to the world frame, defaults to camera’sposeattributeobjpose (

SE3, optional) – 3D point reference frame, defaults to world framebehind (bool, optional) – points behind the camera indicated by NaN, defaults to True

visibility (bool) – return visibility array, defaults to False

retinal (bool, optional) – transform to retinal coordinates, defaults to False

- Returns

image plane points, optional visibility vector

- Return type

ndarray(2,n), ndarray(n)

Project a 3D point to the image plane

\[\hvec{p} = \mat{C} \hvec{P}\]where \(\mat{C}\) is the camera calibration matrix and \(\hvec{p}\) and \(\hvec{P}\) are the image plane and world frame coordinates respectively, in homogeneous form.

World points are given as a 1D array or the columns of a 2D array of Euclidean or homogeneous coordinates. The computed image plane coordinates are Euclidean or homogeneous and given as a 1D array or the corresponding columns of a 2D array.

If

poseis specified it is used for the camera frame pose, otherwise the attributeposeis used. The object’sposeattribute is not updated ifposeis specified.A single point can be specified as a 3-vector, multiple points as an array with three rows and each column is the 3D point coordinate (X, Y, Z).

The points

Pare by default with respect to the world frame, but they can be transformed by specifyingobjpose.If world points are behind the camera and

behindis True then the image plane coordinates are set to NaN.if

visibilityis True then each projected point is checked to ensure it lies in the bounds of the image plane. In this case there are two return values: the image plane coordinates and an array of booleans indicating if the corresponding point is visible.If

retinalis True then project points in retinal coordinates, in units of metres with respect to the principal point.Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default() >>> camera.project_point((0.3, 0.4, 2)) array([[620.], [660.]])

- References

Robotics, Vision & Control for Python, Section 13.1, P. Corke, Springer 2023.

- Seealso

- project_line(lines)[source]

Project 3D lines to image plane

- Parameters

lines (

Line3instance with N values) – Plucker line or lines- Returns

2D homogeneous lines, one per column

- Return type

ndarray(3,N)

The

Line3object can contain multiple lines. The result array has one column per line, and each column is a vector describing the image plane line in homogeneous form \(\ell_0 u + \ell_1 v + \ell_2 = 0\).The projection is

\[\ell = \vex{\mat{C} \sk{\vec{L}} \mat{C}^{\top}}\]where \(\mat{C}\) is the camera calibration matrix and \(\sk{\vec{L}}\) is the skew matrix representation of the Plucker line.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import Line3 >>> line = Line3.Join((-3, -4, 5), (5, 2, 6)) >>> line Plucker([-34, 43, 14, -8, -6, -1]) >>> camera = CentralCamera() >>> camera.project_line(line) array([[ 0.7907], [-1. ], [-0.3256]])

- References

Robotics, Vision & Control for Python, Section 13.7.1, P. Corke, Springer 2023.

- Seealso

CLine3project_pointproject_quadric

- project_quadric(Q)[source]

Project 3D quadric to image plane

- Parameters

Q (ndarray(4,4)) – quadric matrix

- Returns

image plane conic

- Return type

ndarray(3,3)

Quadrics, short for quadratic surfaces, are a rich family of 3-dimensional surfaces. There are 17 standard types including spheres, ellipsoids, hyper- boloids, paraboloids, cylinders and cones all described by points \(\vec{x} \in \mathbb{P}^3\) such that

\[\hvec{x}^{\top} \mat{Q} \hvec{x} = 0\]The outline of the quadric is projected to a conic section on the image plane

\[c^* = \mat{C} \mat{Q}^* \mat{C}^{\top}\]where \((\mat{X})^* = det(\mat{X}) \mat{X}^{-1}\) is the adjugate operator.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> T_cam = SE3.Trans(0.2, 0.1, -5) * SE3.Rx(0.2) >>> Q = np.diag([1, 1, 1, -1]) # unit sphere at origin >>> camera = CentralCamera.Default(f=0.015, pose=T_cam); >>> camera.project_quadric(Q) array([[ -2166031.6261, -7965097.3688, -10128.1232], [ -7965097.3688, -11963644.9528, -18212.0607], [ -10128.1232, -18212.0607, -23.2084]])

- References

Robotics, Vision & Control for Python, Section 13.7.1, P. Corke, Springer 2023.

- Seealso

- epiline(p, camera2)[source]

Compute epipolar line

- Parameters

p (array_like(2) or ndarray(2,N)) – image plane point or points

camera2 (

CentralCamerainstance) – second camera

- Returns

epipolar line or lines in homogeneous form

- Return type

ndarray(3), ndarray(3,N)

Compute the epipolar line in

camera2induced by the image plane pointspin the current camera. Each line is given by\[\ell = \mat{F} {}^1 \hvec{p}\]which is in homogeneous form \(\ell_0 u + \ell_1 v + \ell_2 = 0\) and the conjugate point \({}^2 \vec{p}\) lies on this line.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera1 = CentralCamera.Default(name='camera1') >>> camera2 = CentralCamera.Default(pose=SE3(0.1, 0.05, 0), name='camera2') >>> P = [-0.2, 0.3, 5] # world point >>> p1 = camera1.project_point(P) # project to first camera >>> camera1.epiline(p1, camera2) # epipolar line in second camera array([[-0.0001], [ 0.0001], [-0.0393]])

- References

Robotics, Vision & Control for Python, Section 14.2.1, P. Corke, Springer 2023.

- Seealso

- plot_epiline(F, p, *fmt, **kwargs)[source]

Plot epipolar line

- Parameters

F (ndarray(3,3)) – fundamental matrix

p (array_like(2) or ndarray(2,N)) – image plane point or points

fmt – line style argument passed to

plotkwargs – additional line style arguments passed to

plot

Plot the epipolar line induced by the image plane points

pin the camera’s virtual image plane. Each line is given by\[\ell = \mat{F} {}^1 \hvec{p}\]which is in homogeneous form \(\ell_0 u + \ell_1 v + \ell_2 = 0\) and the conjugate point \({}^2 \vec{p}\) lies on this line.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera1 = CentralCamera.Default(name='camera1') >>> camera2 = CentralCamera.Default(pose=SE3(0.1, 0.05, 0), name='camera2') >>> P = [-0.2, 0.3, 5] # world point >>> p1 = camera1.project_point(P) # project to first camera >>> camera2.plot_point(P, 'kd') # project and display in second camera array([[452.], [540.]]) >>> camera2.plot_epiline(camera1.F(camera2), p1) # plot epipolar line in second camera

- References

Robotics, Vision & Control for Python, Section 14.2.1, P. Corke, Springer 2023.

- Seealso

- plot_line3(L, **kwargs)[source]

Plot 3D line on virtual image plane (base method)

- Parameters

L (

Line3) – 3D line in Plucker coordinateskwargs – arguments passed to

plot

The Plucker line is projected to the camera’s virtual image plane and plotted.

Note

Successive calls add items to the virtual image plane.

This method is common to all

CameraBasesubclasses, but it invokes a camera-specific projection method.

- Seealso

- ray(points, pose=None)[source]

Project image plane points to a ray

- Parameters

points (ndarray(2,N)) – image plane points

pose (

SE3, optional) – camera pose, defaults to None

- Returns

corresponding Plucker lines

- Return type

Line3

For each image plane point compute the equation of a Plucker line that represents the 3D ray from the camera origin through the image plane point.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default() >>> line = camera.ray((100, 200)) >>> line Plucker([-0, 0, 0, -0.5, -0.375, 1])

- Reference

“Multiview Geometry”, Hartley & Zisserman, p.162

Robotics, Vision & Control for Python, Section 14.3, P. Corke, Springer 2023.

- Seealso

Line3

- property centre

Position of camera frame

- Returns

Euclidean coordinate of the camera frame’s origin

- Return type

ndarray(3)

Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera1 = CentralCamera.Default(name='camera1', pose=SE3.Trans(1,2,3)) >>> camera1 Name: camera1 [CentralCamera] pixel size: 1e-05 x 1e-05 image size: 1000 x 1000 pose: t = 1, 2, 3; rpy/yxz = 0°, 0°, 0° principal pt: [500. 500.] focal length: [0.008 0.008] >>> camera1.centre array([1., 2., 3.])

- property center

Position of camera frame

- Returns

Euclidean coordinate of the camera frame’s origin

- Return type

ndarray(3)

Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera1 = CentralCamera.Default(name='camera1', pose=SE3.Trans(1,2,3)) >>> camera1 Name: camera1 [CentralCamera] pixel size: 1e-05 x 1e-05 image size: 1000 x 1000 pose: t = 1, 2, 3; rpy/yxz = 0°, 0°, 0° principal pt: [500. 500.] focal length: [0.008 0.008] >>> camera1.center array([1., 2., 3.])

- fov()[source]

Camera field-of-view angles

- Returns

field of view angles in radians

- Return type

ndarray(2)

Computes the field of view angles (2x1) in radians for the camera horizontal and vertical directions.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera1 = CentralCamera.Default(name='camera1') >>> camera1.fov() array([1.1172, 1.1172])

- References

Robotics, Vision & Control for Python, Section 13.1.4, P. Corke, Springer 2023.

- distort(points)[source]

Compute distorted coordinate

- Parameters

points (ndarray(2,n)) – image plane points

- Returns

distorted image plane coordinates

- Return type

ndarray(2,n)

Compute the image plane coordinates after lens distortion has been applied. The lens distortion model is initialized at constructor time.

- property fu

Get focal length in horizontal direction

- Returns

focal length in horizontal direction

- Return type

float

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(name='camera1') >>> camera.fu 0.008

- property fv

Get focal length in vertical direction

- Returns

focal length in vertical direction

- Return type

float

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(name='camera1') >>> camera.fv 0.008

- property f

Set/get focal length

- Returns

focal length in horizontal and vertical directions

- Return type

ndarray(2)

Return focal length in horizontal and vertical directions.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(name='camera1') >>> camera.f array([0.008, 0.008]) >>> camera.f = 0.015 >>> camera.f array([0.015, 0.015]) >>> camera.f = [0.015, 0.020] >>> camera.f array([0.015, 0.02 ])

Note

These are normally identical but will differ if the sensor has non-square pixels or the frame grabber is changing the aspect ratio of the image.

- property fpix

Get focal length in pixels

- Returns

focal length in horizontal and vertical directions in pixels

- Return type

ndarray(2)

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(name='camera1') >>> camera.fpix array([800., 800.])

- Seealso

- property K

Intrinsic matrix of camera

- Returns

intrinsic matrix

- Return type

ndarray(3,3)

Return the camera intrinsic matrix.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(name='camera1') >>> camera.K array([[800., 0., 500.], [ 0., 800., 500.], [ 0., 0., 1.]])

- C(pose=None, retinal=False)[source]

Camera projection matrix

- Parameters

T (

SE3, optional) – camera pose with respect to world frame, defaults to pose from camera objectretinal (bool, optional) – transform to retinal coordinates, default False

- Returns

camera projection/calibration matrix

- Return type

ndarray(3,4)

Return the camera matrix which projects 3D points to the image plane. It is a function of the camera’s intrinsic and extrinsic parameters.

If

retinalis True then project points in retinal coordinates, in units of metres with respect to the principal point.Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(name='camera1') >>> camera.C() array([[800., 0., 500., 0.], [ 0., 800., 500., 0.], [ 0., 0., 1., 0.]]) >>> camera.C(SE3.Trans(0.1, 0, 0)) array([[800., 0., 500., -80.], [ 0., 800., 500., 0.], [ 0., 0., 1., 0.]]) >>> camera.move(SE3(0.1, 0, 0)).C() array([[800., 0., 500., -80.], [ 0., 800., 500., 0.], [ 0., 0., 1., 0.]])

- References

Robotics, Vision & Control for Python, Section 13.1, P. Corke, Springer 2023.

- Seealso

- static points2C(P, p)[source]

Estimate camera matrix from data points

- Parameters

P (ndarray(3,N)) – calibration points in world coordinate frame

p (ndarray(2,N)) – calibration points in image plane

- Returns

camera calibration matrix and residual

- Return type

ndarray(3,4), float

Estimate the camera matrix \(\mat{C}\) determined by least squares from corresponding world

Pand image-planeppoints. Corresponding points are represented by corresponding columns ofPandp. Also returns the residual which is:\[\max | \mat{C}\mat{P} - \mat{p} |\]Example:

>>> from machinevisiontoolbox import CentralCamera, mkcube >>> P = mkcube(0.2) >>> camera_unknown = CentralCamera(f=0.015, rho=10e-6, imagesize=[1280, 1024], noise=0.05, seed=0) >>> T_unknown = SE3.Trans(0.1, 0.2, 1.5) * SE3.RPY(0.1, 0.2, 0.3) >>> p = camera_unknown.project_point(P, objpose=T_unknown) >>> C, resid = CentralCamera.points2C(P, p) >>> C array([[ 852.8155, -233.0621, 633.5638, 740.0291], [ 222.9807, 990.0484, 294.836 , 711.964 ], [ -0.131 , 0.0655, 0.6488, 1. ]]) >>> camera_unknown.C() array([[1500., 0., 640., 0.], [ 0., 1500., 512., 0.], [ 0., 0., 1., 0.]]) >>> resid 0.03767261717553083

Note

This method assumes no lens distortion affecting the image plane coordinates.

- References

Robotics, Vision & Control for Python, Section 13.2.1, P. Corke, Springer 2023.

- Seealso

- classmethod images2C(images, gridshape=(7, 6), squaresize=0.025)[source]

Calibrate camera from checkerboard images

- Parameters

images (

ImageSource) – an iterator that returnsImageobjectsgridshape (tuple, optional) – number of grid squares in each dimension, defaults to (7,6)

squaresize (float, optional) – size of the grid squares in units of length, defaults to 0.025

- Returns

camera calibration matrix, distortion parameters, image frames

- Return type

ndarray(3,4), ndarray(5), list of named tuples

The distortion coefficients are in the order \((k_1, k_2, p_1, p_2, k_3)\) where \(k_i\) are radial distortion coefficients and \(p_i\) are tangential distortion coefficients.

Image frames that were successfully processed are returned as a list of named tuples

CalibrationFramewith elements:element

type

description

image

Imagecalibration image with overlaid annotation

pose

SE3instancepose of the camera with respect to the origin of this image

id

int

sequence number of this image in

imagesNote

The units used for

squaresizemust match the units used for defining 3D points in space.- References

Robotics, Vision & Control for Python, Section 13.7, P. Corke, Springer 2023.

- Seealso

Cpoints2CdecomposeCSE3

- classmethod decomposeC(C)[source]

Decompose camera calibration matrix

- Parameters

C (ndarray(3,4)) – camera calibration matrix

- Returns

camera model parameters

- Return type

Decompose a \(3\times 4\) camera calibration matrix

Cto determine feasible intrinsic and extrinsic parameters. The result is aCentralCamerainstance with the following parameters set:Parameter

Meaning

ffocal length in pixels

sx,sypixel size where

sx=1(

u0,v0)principal point

posepose of the camera frame wrt world

Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera = CentralCamera(name='camera1') >>> C = camera.C(SE3(0.1, 0, 0)) >>> CentralCamera.decomposeC(C) Name: invC [CentralCamera] pixel size: 1.0 x 1.0 pose: t = 0.1, 0, 0; rpy/yxz = 0°, 0°, 0° principal pt: [0. 0.] focal length: [1. 1.]

Note

Since only \(f s_x\) and \(f s_y\) can be estimated we set \(s_x = 1\).

- H(T, n, d)[source]

Compute homography from plane and camera pose

- Parameters

T (

SE3) – relative camera motionn (array_like(3)) – plane normal with respect to world frame

d (float) – plane offset from world frame origin

- Returns

homography matrix

- Return type

ndarray(3,3)

Computes the homography matrix for the camera observing points on a plane from two viewpoints. The first view is from the current camera pose (

self.pose), and the second is after a relative motion represented by the rigid-body motionT. The plane has normalnand at distancedwith respect to the world frame.Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera = CentralCamera.Default(name='camera1') # looking along z-axis >>> plane = [0, 1, 1] >>> H = camera.H(SE3.Tx(0.2), plane, 5) >>> H array([[1., 0., 0.], [0., 1., 0.], [0., 0., 1.]])

- Seealso

- static points2H(p1, p2, method='leastsquares', seed=None, **kwargs)[source]

Estimate homography from corresponding points

- Parameters

p1 (ndarray(2,N)) – image plane points from first camera

p2 (ndarray(2,N)) – image plane points from second camera

method (str) – algorithm: ‘leastsquares’ [default], ‘ransac’, ‘lmeds’, ‘prosac’

kwargs – optional arguments as required for ransac’ and ‘lmeds’ methods

- Returns

homography, residual and optional inliers

- Return type

ndarray(3,3), float, ndarray(N,bool)

Compute a homography from two sets of corresponding image plane points whose world points lie on a plane.

Example:

>>> from machinevisiontoolbox import CentralCamera, mkgrid >>> from spatialmath import SE3 >>> camera1 = CentralCamera(name="camera 1", f=0.002, imagesize=1000, rho=10e-6, pose=SE3.Tx(-0.1)*SE3.Ry(0.4)) >>> camera2 = CentralCamera(name="camera 2", f=0.002, imagesize=1000, rho=10e-6, pose=SE3.Tx(0.1)*SE3.Ry(-0.4)) >>> T_grid = SE3.Tz(1) * SE3.Rx(0.1) * SE3.Ry(0.2) >>> P = mkgrid(3, 1.0, pose=T_grid) >>> p1 = camera1.project_point(P) >>> p2 = camera2.project_point(P); >>> H, resid = CentralCamera.points2H(p1, p2) >>> H array([[ -0.4187, -0.0004, 397.7657], [ -0.6981, 0.3738, 309.4588], [ -0.0014, -0. , 1. ]]) >>> resid 4.838106817677405e-05

Note

If the method is ‘ransac’ or ‘lmeds’ then a boolean array of inliers is also returned, True means the corresponding input point pair is an inlier.

- Reference

Robotics, Vision & Control for Python, Section 14.2.4, P. Corke, Springer 2023.

- Seealso

- decomposeH(H, K=None)[source]

Decompose homography matrix

- Parameters

H (ndarray(3,3)) – homography matrix

K (ndarray(3,3), optional) – camera intrinsics, defaults to parameters from object

- Returns

camera poses, plane normals

- Return type

SE3, list of ndarray(3,1)

Decomposes the homography matrix into the camera motion and the normal to the plane. In practice, there are multiple solutions. The translation not to scale.

Example:

>>> from machinevisiontoolbox import CentralCamera, mkgrid >>> from spatialmath import SE3 >>> camera1 = CentralCamera(name="camera 1", f=0.002, imagesize=1000, rho=10e-6, pose=SE3.Tx(-0.1)*SE3.Ry(0.4)) >>> camera2 = CentralCamera(name="camera 2", f=0.002, imagesize=1000, rho=10e-6, pose=SE3.Tx(0.1)*SE3.Ry(-0.4)) >>> T_grid = SE3.Tz(1) * SE3.Rx(0.1) * SE3.Ry(0.2) >>> P = mkgrid(3, 1.0, pose=T_grid) >>> p1 = camera1.project_point(P) >>> p2 = camera2.project_point(P); >>> H, resid = CentralCamera.points2H(p1, p2) >>> T, normals = camera1.decomposeH(H) >>> T.printline(orient="camera") t = -0.185, 0, -0.0783; rpy/yxz = -8.39e-06°, 4.61e-06°, -45.8° t = 0.185, 0, 0.0783; rpy/yxz = -8.39e-06°, 4.61e-06°, -45.8° t = 0.0197, 0.0192, -0.199; rpy/yxz = 1.09°, 0.338°, -34.4° t = -0.0197, -0.0192, 0.199; rpy/yxz = 1.09°, 0.338°, -34.4° >>> normals (array([[ 0.1968], [ 0.0978], [-0.9756]]), array([[-0.1968], [-0.0978], [ 0.9756]]), array([[-0.9552], [-0.01 ], [-0.2958]]), array([[0.9552], [0.01 ], [0.2958]]))

- Reference

Robotics, Vision & Control for Python, Section 14.2.4, P. Corke, Springer 2023.

- Seealso

- F(other)[source]

Fundamental matrix

- Parameters

other (

CentralCamera,SE3) – second camera view- Returns

fundamental matrix

- Return type

numpy(3,3)

Compute the fundamental matrix relating two camera views. The first view is defined by the instance. The second is defined by:

another

CentralCamerainstancean SE3 pose describing the pose of the second view with respect to the first, assuming the same camera intrinsic parameters.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera1 = CentralCamera(name="camera 1", f=0.002, imagesize=1000, rho=10e-6, pose=SE3.Tx(-0.1)*SE3.Ry(0.4)) >>> camera2 = CentralCamera(name="camera 2", f=0.002, imagesize=1000, rho=10e-6, pose=SE3.Tx(0.1)*SE3.Ry(-0.4)) >>> F = camera1.F(camera2) >>> F array([[ 0. , -0. , 0.001 ], [-0. , 0. , 0.0019], [ 0.001 , 0.0001, -0.9735]]) >>> F = camera1.F(SE3.Tx(0.2)) >>> F array([[ 0. , 0. , 0. ], [ 0. , 0. , 0.001], [ 0. , -0.001, 0. ]])

- static points2F(p1, p2, method='8p', residual=True, seed=None, **kwargs)[source]

Estimate fundamental matrix from corresponding points

- Parameters

p1 (ndarray(2,N)) – image plane points from first camera

p2 (ndarray(2,N)) – image plane points from second camera

method (str, optional) – algorithm ‘7p’, ‘8p’ [default], ‘ransac’, ‘lmeds’

kwargs – optional arguments as required for ransac’, ‘lmeds’ methods

- Returns

fundamental matrix and residual

- Return type

ndarray(3,3), float

Computes the fundamental matrix from two sets of corresponding image-plane points. Corresponding points are given by corresponding columns of

p1andp2.Example:

>>> from machinevisiontoolbox import CentralCamera, mkgrid >>> from spatialmath import SE3 >>> camera1 = CentralCamera(name="camera 1", f=0.002, imagesize=1000, rho=10e-6, pose=SE3.Tx(-0.1)*SE3.Ry(0.4)) >>> camera2 = CentralCamera(name="camera 2", f=0.002, imagesize=1000, rho=10e-6, pose=SE3.Tx(0.1)*SE3.Ry(-0.4)) >>> T_grid = SE3.Tz(1) * SE3.Rx(0.1) * SE3.Ry(0.2) >>> P = mkgrid(3, 1.0, pose=T_grid) >>> p1 = camera1.project_point(P) >>> p2 = camera2.project_point(P); >>> F, resid = CentralCamera.points2F(p1, p2) >>> F array([[-0.0001, 0.0005, -0.2083], [-0.0006, -0. , 0.2645], [ 0.3044, -0.3069, 1. ]]) >>> resid 2.2567392544778285e-06

- Seealso

- static epidist(F, p1, p2)[source]

Epipolar distance

- Parameters

F (ndarray(3,3)) – fundamental matrix

p1 (ndarray(2) or ndarray(2,N)) – image plane point or points from first camera

p2 (ndarray(2) or ndarray(2,M)) – image plane point or points from second camera

- Returns

distance matrix

- Return type

ndarray(N,M)

Computes the distance of the points

p2from the epipolar lines induced by pointsp1. Element [i,j] of the return value is the istance of point j in camera 2 from the epipolar line induced by point i in camera 1.

- E(other)[source]

Essential matrix from two camera views

- Parameters

other (

CentralCamera,SE3, ndarray(3,3)) – second camera view, camera pose or fundamental matrix- Returns

essential matrix

- Return type

ndarray(3,3)

Compute the essential matrix relating two camera views. The first view is defined by the instance, and second view is specified by:

a camera instance represented by a

CentralCamera. Assumes the cameras have the same intrinsics.a relative motion represented by a

SE3a fundamental matrix

- Reference

Y.Ma, J.Kosecka, S.Soatto, S.Sastry, “An invitation to 3D”, Springer, 2003. p.177

- Seealso

- points2E(p1, p2, method=None, K=None, **kwargs)[source]

Essential matrix from points

- Parameters

P1 (ndarray(2,N)) – image plane points

P2 (ndarray(2,N)) – image plane points

method (str) – method, can be ‘ransac’ or ‘lmeds’

K (ndarray(3,3), optional) – camera intrinsic matrix, defaults to that of camera object

kwargs – additional arguments required for ‘ransac’ or ‘lmeds’ options

- Returns

essential matrix and optional inlier vevtor

- Return type

ndarray(3,3), ndarray(N, bool)

Compute the essential matrix from two sets of corresponding points. Each set of points is represented by the columns of the array

p1orp2.Example:

>>> from machinevisiontoolbox import CentralCamera, mkgrid >>> from spatialmath import SE3 >>> camera1 = CentralCamera(name="camera 1", f=0.002, imagesize=1000, rho=10e-6, pose=SE3.Tx(-0.1)*SE3.Ry(0.4)) >>> camera2 = CentralCamera(name="camera 2", f=0.002, imagesize=1000, rho=10e-6, pose=SE3.Tx(0.1)*SE3.Ry(-0.4)) >>> T_grid = SE3.Tz(1) * SE3.Rx(0.1) * SE3.Ry(0.2) >>> P = mkgrid(3, 1.0, pose=T_grid) >>> p1 = camera1.project_point(P) >>> p2 = camera2.project_point(P); >>> E, inliers = camera1.points2E(p1, p2) >>> E array([[-0. , 0.2754, 0. ], [ 0.2754, -0. , -0.6513], [-0. , 0.6513, -0. ]]) >>> inliers array([ True, True, True, True, True, True, True, True, True])

Note

If the method is ‘ransac’ or ‘lmeds’ then a boolean array of inliers is also returned, True means the corresponding input point pair is an inlier.

- Seealso

- decomposeE(E, P=None)[source]

Decompose essential matrix

- Parameters

E (ndarray(3,3)) – essential matrix

P (array_like(3),

FeatureMatch) – world point or feature match object to resolve ambiguity

- Returns

camera relative pose

- Return type

SE3

Determines relative pose from essential matrix. This operation has multiple solutions which is resolved by passing in:

a single 3D world point in front of the camera

a

FeatureMatchobject

- Reference

OpenCV:

- visjac_p(uv, depth)[source]

Visual Jacobian for point features

- Parameters

p (array_like(2), ndarray(2,N)) – image plane point or points

depth (float, array_like(N)) – point depth

- Returns

visual Jacobian matrix

- Return type

ndarray(2,6), ndarray(2N,6)

Compute the image Jacobian \(\mat{J}\) which maps

\[\dvec{p} = \mat{J}(\vec{p}, z) \vec{\nu}\]camera spatial velocity \(\vec{\nu}\) to the image plane velocity \(\dvec{p}\) of the point.

If

pdescribes multiple points then return a stack of these \(2\times 6\) matrices, one per point.Depth is the z-component of the point’s coordinate in the camera frame. If

depthis a scalar then it is the depth for all points.Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera = CentralCamera.Default() >>> camera.visjac_p((200, 300), 2) array([[-400. , 0. , -150. , 75. , -912.5, -200. ], [ 0. , -400. , -100. , 850. , -75. , 300. ]])

- References

A tutorial on Visual Servo Control, Hutchinson, Hager & Corke, IEEE Trans. R&A, Vol 12(5), Oct, 1996, pp 651-670.

Robotics, Vision & Control for Python, Section 15.2.1, P. Corke, Springer 2023.

- Seealso

- visjac_p_polar(p, Z)[source]

Visual Jacobian for point features in polar coordinates

- Parameters

p (array_like(2), ndarray(2,N)) – image plane point or points

depth (float, array_like(N)) – point depth

- Returns

visual Jacobian matrix in polar coordinates

- Return type

ndarray(2,6), ndarray(2N,6)

Compute the image Jacobian \(\mat{J}\) which maps

\[\begin{split}\begin{pmatrix} \dot{\phi} \\ \dot{r} \end{pmatrix} = \mat{J}(\vec{p}, z) \vec{\nu}\end{split}\]camera spatial velocity \(\vec{\nu}\) to the image plane velocity of the point expressed in polar coordinate form \((\phi, r)\).

If

pdescribes multiple points then return a stack of these \(2\times 6\) matrices, one per point.Depth is the z-component of the point’s coordinate in the camera frame. If

depthis a scalar then it is the depth for all points.Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera = CentralCamera.Default() >>> camera.visjac_p_polar((200, 300), 2) array([[ 0.0015, 0.0008, 0. , -0.0016, 0.0029, 1. ], [ 0.2436, -0.4366, -150. , 78597.63 , 43847.3779, 0. ]])

- visjac_l(lines, plane)[source]

Visual Jacobian for line features

- Parameters

lines – image plane line parameters

plane (array_like(4)) – plane containing the line

- Returns

visual Jacobian matrix for line feature

- Return type

ndarray(2,6), ndarray(2N,6)

Compute the Jacobian which gives the rates of change of the line parameters in terms of camera spatial velocity.

For image planes lines

\[u \cos \theta + v \sin \theta = \rho\]the image Jacobian \(\mat{J}\) maps

\[\begin{split}\begin{pmatrix} \dot{\theta} \\ \dot{\rho} \end{pmatrix} = \mat{J}(\vec{p}, z) \vec{\nu}\end{split}\]camera spatial velocity \(\vec{\nu}\) to the image plane velocity of the line parameters \((\theta, \rho)\).

The world plane containing the line is also required, and is provided as a vector \((a,b,c,d)\) such that

If

linesdescribes multiple points then return a stack of these \(2\times 6\) matrices, one per point.Depth is the z-component of the point’s coordinate in the camera frame. If

depthis a scalar then it is the depth for all points.Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera = CentralCamera.Default() >>> camera.visjac_l((0.2, 500), (0, 0, 1, -3)) array([[ -0. , -0. , 0. , -490.0333, -99.3347, -1. ], [ -0.3267, -0.0662, 166.6667, 49667.5314, -245017.6245, 0. ]])

- References

A New Approach to Visual Servoing in Robotics, B. Espiau, F. Chaumette, and P. Rives, IEEE Transactions on Robotics and Automation, vol. 8, pp. 313-326, June 1992.

Visual servo control 2: Advanced approaches Chaumette F, Hutchinson S, IEEE Robot Autom Mag 14(1):109–118 (2007)

Robotics, Vision & Control for Python, Section 15.3.1, P. Corke, Springer 2023.

- Seealso

- visjac_e(E, plane)[source]

Visual Jacobian for ellipse features

- Parameters

E (array_like(5), ndarray(5,N)) – image plane ellipse parameters

plane (array_like(4)) – plane containing the ellipse

- Returns

visual Jacobian matrix for ellipse feature

- Return type

ndarray(2,6), ndarray(2N,6)

Compute the Jacobian gives the rates of change of the ellipse parameters in terms of camera spatial velocity.

For image plane ellipses

\[u^2 + E_0 v^2 -2 E_1 u v + 2 E_2 u + 2 E_3 v + E_4 = 0\]the image Jacobian \(\mat{J}\) maps

\[\begin{split}\begin{pmatrix} \dot{E_0} \\ \vdots \\ \dot{E_4} \end{pmatrix} = \mat{J}(\vec{p}, z) \vec{\nu}\end{split}\]camera spatial velocity \(\vec{\nu}\) to the velocity of the ellipse parameters \((E_0 \ldots E_4)\).

The world plane containing the ellipse is also required, and is provided as a vector \((a,b,c,d)\) such that

Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera = CentralCamera.Default() >>> camera.visjac_e(((0.5, 0, -1000, -500, 374900)), (0, 0, 1, -1) ...

- References

A New Approach to Visual Servoing in Robotics, B. Espiau, F. Chaumette, and P. Rives, IEEE Transactions on Robotics and Automation, vol. 8, pp. 313-326, June 1992.

Visual servo control 2: Advanced approaches Chaumette F, Hutchinson S, IEEE Robot Autom Mag 14(1):109–118 (2007)

Robotics, Vision & Control for Python, Section 15.3.2, P. Corke, Springer 2023.

- Seealso

- property camtype

Set/get camera type (base method)

A camera has a string-valued type that can be read and written. This is unique to the camera subclass and projection model.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera(); >>> camera.camtype 'perspective' >>> camera.camtype = "foo" >>> camera.camtype 'foo'

- clf()

Clear the virtual image plane (base method)

Every camera object has a virtual image plane drawn using Matplotlib. Remove all points and lines from the image plane.

- Seealso

plot_pointplot_linedisp

- disp(im, **kwargs)

Display image on virtual image plane (base method)

- Parameters

im (

Imageinstance) – image to displaykwargs – options to

idisp

An image is displayed on camera’s the virtual image plane.

- Seealso

machinevisiontoolbox.base.idisp

- flowfield(vel, Z=2)[source]

Display optical flow field

- Parameters

vel (array_like(6)) – camera spatial velocity

Z (scalar, optional) – _description_, defaults to 2

Display the optical flow field using Matplotlib, for a grid of points at distance

Zfor a camera velocity ofvel.Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default() >>> camera.flowfield([0, 0, 0, 0, 1, 0])

- Seealso

- property height

Get image plane height (base method)

- Returns

height

- Return type

int

Image plane width, number of pixels in the u-direction

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(); >>> camera.height 1000

- property imagesize

Set/get size of virtual image plane (base method)

The dimensions of the virtual image plane is a 2-tuple, width and height, that can be read or written. For writing the size must be an iterable of length 2.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(); >>> camera.imagesize array([1000, 1000]) >>> camera.imagesize = (500, 500) >>> camera.imagesize array([500, 500])

Note

If the principal point is not set, then setting imagesize sets the principal point to the centre of the image plane.

- move(T, name=None, relative=False)

Move camera (base method)

- Parameters

T (

SE3) – pose of camera framerelative (bool, optional) – move relative to pose of original camera, defaults to False

- Returns

new camera object

- Return type

CameraBasesubclass

Returns a copy of the camera object with pose set to

T.Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera = CentralCamera(); >>> camera.move(SE3.Trans(0.1, 0.2, 0.3)) Name: perspective-moved [CentralCamera] pixel size: 1.0 x 1.0 pose: t = 0.1, 0.2, 0.3; rpy/yxz = 0°, 0°, 0° principal pt: [0. 0.] focal length: [1. 1.] >>> camera Name: perspective [CentralCamera] pixel size: 1.0 x 1.0 pose: t = 0, 0, 0; rpy/yxz = 0°, 0°, 0° principal pt: [0. 0.] focal length: [1. 1.]

Note

The

plotmethod of this cloned camera will create a new window.- Seealso

- property name

Set/get camera name (base method)

A camera has a string-valued name that can be read and written.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera(); >>> camera.name 'perspective' >>> camera.name = "foo" >>> camera.name 'foo'

- property noise

Set/Get projection noise (base method)

- Returns

standard deviation of noise added to projected image plane points

- Return type

float

The noise parameter is set by the object constructor.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(); >>> camera.project_point([0, 0, 3]) array([[500.], [500.]]) >>> camera.noise = 2 >>> camera.project_point([0, 0, 2]) array([[501.1406], [499.1372]]) >>> camera.project_point([0, 0, 2]) array([[497.2934], [501.8915]])

- Seealso

project

- property nu

Get image plane width (base method)

- Returns

width

- Return type

int

Number of pixels in the u-direction (width)

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(); >>> camera.nu 1000

- property nv

Get image plane height (base method)

- Returns

height

- Return type

int

Number of pixels in the v-direction (height)

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(); >>> camera.nv 1000

- plot(pose=None, scale=1, shape='camera', label=True, alpha=1, solid=False, color='r', projection='ortho', frame=False, ax=None)

Plot 3D camera icon in world view (base method)

- Parameters

pose (

SE3) – camera posescale (float) – scale factor, defaults to 1

shape (str, optional) – icon shape: ‘frustum’ [default], ‘camera’

label (bool, optional) – show camera name, defaults to True

alpha (float, optional) – transparency of icon, defaults to 1

solid (bool, optional) – icon comprises solid faces, defaults to False

color (str, optional) – icon color, defaults to ‘r’

projection (str, optional) – projection model for new axes, defaults to ‘ortho’

ax (

Axes3D, optional) – axes to draw in, defaults to current 3D axes

- Returns

axes drawn into

- Return type

Axes3D

Plot a 3D icon representing the pose of a camera into a 3D Matplotlib plot. Two icons are supported: the traditional frustum, and a simplistic camera comprising a box and cylinder.

Note

If

poseis not given it defaults to the pose of the instance.

- plot_line2(l, *args, **kwargs)

Plot 2D line on virtual image plane (base method)

- Parameters

l (array_like(3)) – homogeneous line

kwargs – arguments passed to

plot

Plot the homogeneous line on the camera’s virtual image plane. The line is expressed in the form

\[\ell_0 u + \ell_1 v + \ell_2 = 0\]Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default() >>> camera.plot_line2([1, 0.2, -500])

Note

Successive calls add items to the virtual image plane.

This method is common to all

CameraBasesubclasses, but it invokes a camera-specific projection method.

- Seealso

- plot_point(P, *fmt, return_artist=False, objpose=None, pose=None, ax=None, **kwargs)

Plot points on virtual image plane (base method)

- Parameters

P (ndarray(3,), ndarray(3,N), or ndarray(2,), ndarray(2,N)) – 3D world point or points, or 2D image plane point or points

objpose (

SE3, optional) – transformation for the 3D points, defaults to Nonepose (

SE3, optional) – pose of the camera, defaults to Noneax (

matplotlib.axes) – axes to plot intokwargs – additional arguments passed to

plot

- Returns

Matplotlib line objects

- Return type

list of

Line2d

3D world points are first projected to the image plane and then plotted on the camera’s virtual image plane. Points are organized as columns of the arrays.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default() >>> camera.plot_point([0.2, 0.3, 2]) >>> camera.plot_point([0.2, 0.3, 2], 'r*') >>> camera.plot_point([0.2, 0.3, 2], pose=SE3(0.1, 0, 0))

Note

Successive calls add items to the virtual image plane.

This method is common to all

CameraBasesubclasses, but it invokes a camera-specific projection method.

- Seealso

- plot_wireframe(X, Y, Z, *fmt, objpose=None, pose=None, nsteps=21, **kwargs)

Plot 3D wireframe in virtual image plane (base method)

- Parameters

X (ndarray(N,M)) – world X coordinates

Y (ndarray(N,M)) – world Y coordinates

Z (ndarray(N,M)) – world Z coordinates

objpose (

SE3, optional) – transformation for the wireframe points, defaults to Nonepose (

SE3, optional) – pose of the camera, defaults to Nonensteps (int, optional) – number of points for each wireframe segment, defaults to 21

kwargs – arguments passed to

plot

The 3D wireframe is projected to the camera’s virtual image plane. Each wire link in the wireframe is approximated by

nstepspoints, each of which is projected, allowing straight edges to appear curved.Example:

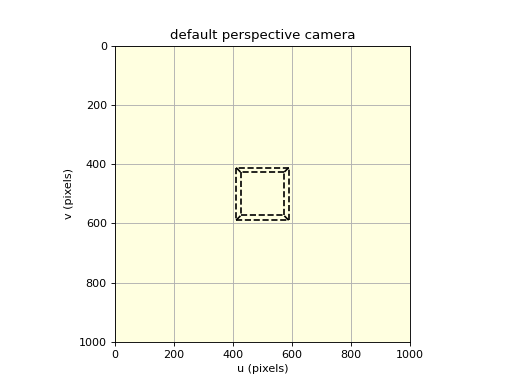

>>> from machinevisiontoolbox import CentralCamera, mkcube >>> from spatialmath import SE3 >>> camera = CentralCamera.Default() >>> X, Y, Z = mkcube(0.2, pose=SE3(0, 0, 1), edge=True) >>> camera.plot_wireframe(X, Y, Z, 'k--')

(Source code, png, hires.png, pdf)

- Seealso

mkcubespatialmath.base.cylinderspatialmath.base.spherespatialmath.base.cuboid

- property pose

Set/get camera pose (base method)

The camera pose with respect to the global frame can be read or written as an

SE3instance.Example:

>>> from machinevisiontoolbox import CentralCamera >>> from spatialmath import SE3 >>> camera = CentralCamera(); >>> camera.pose SE3(array([[1., 0., 0., 0.], [0., 1., 0., 0.], [0., 0., 1., 0.], [0., 0., 0., 1.]])) >>> camera.pose = SE3.Trans(1, 2, 3) >>> camera Name: perspective [CentralCamera] pixel size: 1.0 x 1.0 pose: t = 1, 2, 3; rpy/yxz = 0°, 0°, 0° principal pt: [0. 0.] focal length: [1. 1.]

Note

Changes the pose of the current camera instance, whereas

moveclones the camera instance with a new pose.- Seealso

- property pp

Set/get principal point coordinate (base method)

The principal point is the coordinate of the point where the optical axis pierces the image plane. It is a 2-tuple which can be read or written. For writing the size must be an iterable of length 2.

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(); >>> camera.pp array([500., 500.])

- reset()

Reset camera pose (base method)

Restore camera to a copy of the pose given to the constructor. The copy means that the camera pose can be modified freely, without destroying the initial pose value.

- property rho

Get pixel dimensions (base method)

- Returns

horizontal pixel size

- Return type

ndarray(2)

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera(); >>> camera.rhov 1.0

- property rhou

Get pixel width (base method)

- Returns

horizontal pixel size

- Return type

float

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(); >>> camera.rhou 1e-05

- property rhov

Get pixel width (base method)

- Returns

vertical pixel size

- Return type

float

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(); >>> camera.rhov 1e-05

- property u0

Get principal point: horizontal coordinate (base method)

- Returns

horizontal component of principal point

- Return type

float

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera(); >>> camera.u0 0.0

- property v0

Get principal point: vertical coordinate (base method)

- Returns

vertical component of principal point

- Return type

float

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera(); >>> camera.v0 0.0

- property width

Get image plane width (base method)

- Returns

width

- Return type

int

Image plane height, number of pixels in the v-direction

Example:

>>> from machinevisiontoolbox import CentralCamera >>> camera = CentralCamera.Default(); >>> camera.width 1000

- derivatives(x, P)[source]

Compute projection and derivatives for bundle adjustment

- Parameters

x (array_like(6)) – camera pose as translation and quaternion vector part

P (array_like(3)) – 3D world point

- Returns

p, A, B

- Return type

ndarray(2), ndarray(2,6), ndarray(2,3)

For a world point \(\vec{x}\) compute the image plane projection and the sensitivity to camera and point change

\[\mat{A} = \frac{\partial \vec{f}(\vec{x})}{\partial \pose}, \mat{B} = \frac{\partial \vec{f}(\vec{x})}{\partial \vec{P}}\]where \(\vec{f}(\vec{x})\) is the perspective projection function.

- Seealso

- estpose(P, p, method='iterative', frame='world')[source]

Estimate object pose

- Parameters

P (ndarray(3, N)) – A set of 3D points defining the object with respect to its own frame

p (ndarray(2, N)) – Image plane projection of the object points

method (str, optional) – pose estimation algorithm, see OpenCV solvePnP, defaults to ‘iterative’

frame (str, optional) – estimate pose with respect to frame “world” [default] or “camera”

- Returns

pose of target frame relative to the world frame

- Return type

SE3

Using a set of points defining some object with respect to its own frame {B}, and a set of image-plane projections, estimate the pose of {B} with respect to the world or camera frame.

To estimate the camera’s pose with respect to the world frame the camera’s pose

self.poseis used.Note

All of the OpenCV estimation algorithms are supported.

Algorithm

"ippe-square"requires exactly four points at the corners of a square and in the order: (-x, y), (x, y), (x, -y), (-x, -y).

- Seealso